Xpeng IRON shows physical AI is here. See what 82 DOF means, where near-term ROI lives, and get a 90-day plan to pilot humanoids with agentic swarms.

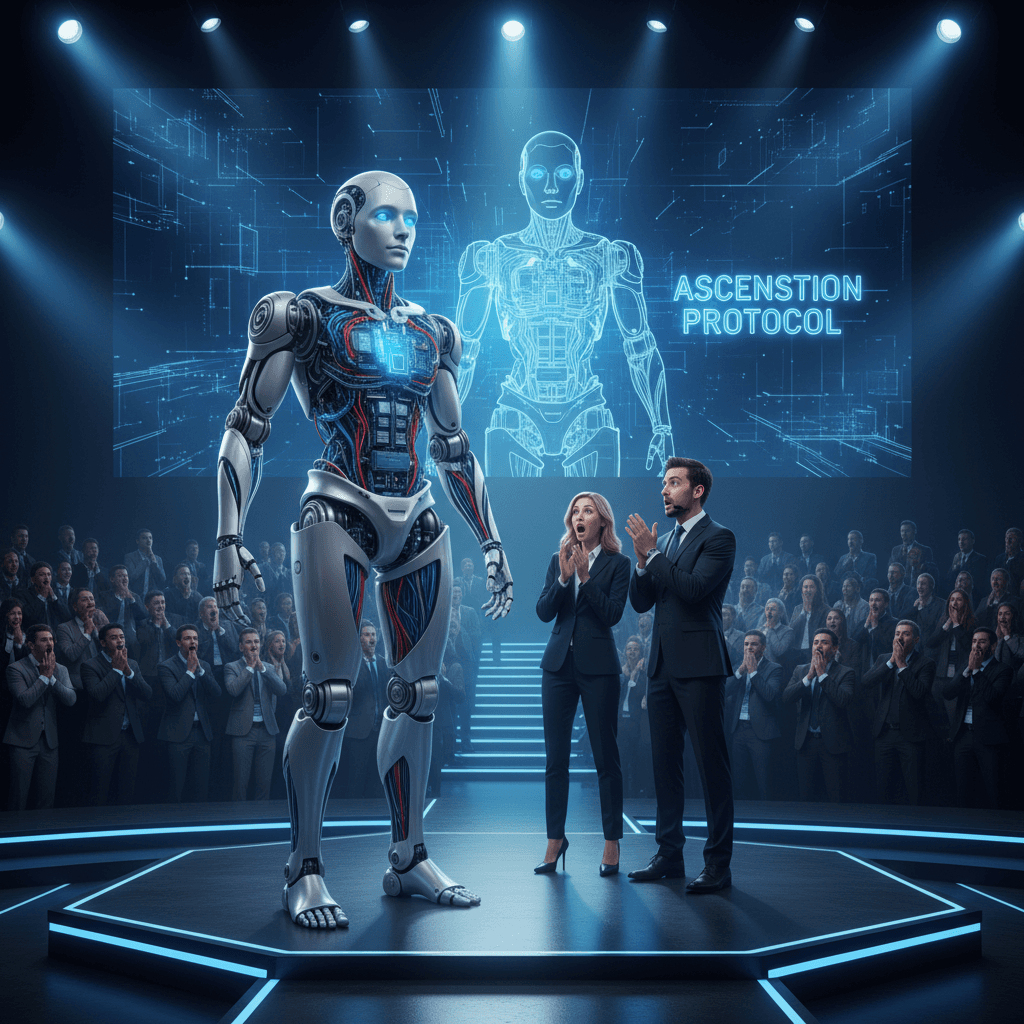

In a moment made for Q4 headlines, the Xpeng IRON robot took the stage, and for a few seconds the audience wasn't sure whether it was watching a machine or a person. Then the robot was cut open live. The shock wasn't just the spectacle. It was a signal: the Xpeng IRON robot marks a new phase of "physical AI," where embodied systems stop looking like prototypes and start behaving like co‑workers.

Why does this matter now? As 2025 closes and teams lock 2026 budgets, leaders face a choice: treat humanoids and agentic AI as hype, or use them to close persistent operational gaps in logistics, retail, and service. This guide unpacks what's real, what's rumor, and where the near‑term ROI lives.

You'll get a plain‑English breakdown of the "cut‑open" demo and its 82 degrees of freedom, a field guide to physical AI use cases, and a look at what the latest model chatter (GPT‑5.1, Grok 5, Gemini 3.0) and swarm techniques mean for your stack. We'll finish with a 90‑day plan you can kick off before year‑end.

Why Xpeng's IRON Robot Shook the Room

The demo worked because it reframed humanoids from research curiosities to production‑adjacent machines. Lifelike gait, balanced posture, and humanlike micro‑motions trigger our social wiring. When an on‑stage teardown revealed motors, linkages, and cabling where "muscle" should be, the message was unmistakable: this isn't theater. It's engineering.

Three takeaways from the moment:

- The optics were deliberate. If robots can pass at a glance, front‑of‑house use cases move from awkward to acceptable.

- The build quality is maturing. Tighter tolerances, clean cable routing, and compact actuators suggest supply chains are catching up.

- The road to ROI shifts to integration. The next wins won't come from showmanship—they'll come from workflow design, safety cases, and data loops.

Physical AI isn't about replacing people—it's about giving your software hands, feet, and sensorimotor feedback so it can deliver outcomes in the real world.

What "Cut Open" and 82 DOF Really Mean

"Degrees of freedom" (DOF) are the controllable axes in a mechanism—think shoulder pitch, yaw, and roll counted separately. An 82‑DOF humanoid doesn't mean 82 independent brains; it means many joints that must coordinate as one.

DOF vs. control quality

- High DOF enables expressiveness: fine hand poses, compliant grasps, and stable walking over irregular floors.

- Real capability comes from control stacks: whole‑body control, model predictive control, and visual servoing that blend vision, proprioception, and force feedback.

- Expect redundancy: multiple joints may cooperate to achieve a single end‑effector goal while maintaining balance and avoiding self‑collision.
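Redundancy is easiest to see in a simplified planar arm. The sketch below assumes a hypothetical three‑link arm moving in 2D (link lengths are made up): it has three joints but only two position coordinates to satisfy, so the orientation of the last link, `phi`, is a free choice. Different values of `phi` give different joint configurations that put the end effector on the same point. That spare freedom is what a whole‑body controller can spend on balance or collision avoidance. It uses textbook closed‑form two‑link inverse kinematics, not any vendor's control stack.

```python
import math

LINKS = (1.0, 1.0, 0.5)  # hypothetical link lengths in metres


def forward(q: tuple[float, float, float]) -> tuple[float, float]:
    """Planar forward kinematics: joint angles (rad) -> end-effector (x, y)."""
    x = y = theta = 0.0
    for length, angle in zip(LINKS, q):
        theta += angle  # joint angles accumulate along the chain
        x += length * math.cos(theta)
        y += length * math.sin(theta)
    return x, y


def inverse(target: tuple[float, float], phi: float):
    """Joint angles that reach `target` with the last link at absolute angle `phi`.

    `phi` is the redundant DOF: any reachable value gives a valid solution.
    Returns None when the wrist point is out of reach for that phi.
    """
    l1, l2, l3 = LINKS
    # Back off along the last link to find where the wrist must be.
    wx = target[0] - l3 * math.cos(phi)
    wy = target[1] - l3 * math.sin(phi)
    # Standard two-link IK (one of the two elbow branches).
    c2 = (wx**2 + wy**2 - l1**2 - l2**2) / (2 * l1 * l2)
    if abs(c2) > 1:
        return None
    q2 = math.acos(c2)
    q1 = math.atan2(wy, wx) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    q3 = phi - q1 - q2  # absolute orientation of the last link equals q1 + q2 + q3
    return (q1, q2, q3)


target = (1.5, 0.8)
for phi in (-0.5, 0.0, 0.5):
    q = inverse(target, phi)
    x, y = forward(q)
    print(f"phi={phi:+.1f} joints={[round(a, 3) for a in q]} -> ({x:.3f}, {y:.3f})")
```

All three lines print different joint angles but the same end‑effector position, which is the "multiple joints cooperate to achieve a single end‑effector goal" idea in miniature.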

Sensors and "physical AI"

Inside a modern humanoid you'll typically find:

- Vision: stereo or depth cameras for SLAM and object pose estimation.

- Tactile and force: joint torque sensors and fingertip pressure sensors for safe grasping.

- Inertial: IMUs for balance and fall prevention.

- Edge compute: on‑board processors for perception and control loops with millisecond latency.
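The IMU bullet hides a small fusion problem: gyroscopes are smooth but drift, while accelerometers give an absolute tilt reference from gravity but are noisy and only trustworthy when the body isn't accelerating. A complementary filter is one of the simplest ways to blend them. The sketch below estimates a single pitch angle. The sample format, axis convention, and `alpha` value are assumptions for illustration, not how any particular humanoid's balance stack works.

```python
import math


def accel_pitch(ax: float, az: float) -> float:
    """Tilt (rad) implied by the gravity vector; assumes the body isn't accelerating."""
    return math.atan2(ax, az)


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> float:
    """Blend integrated gyro rate (smooth, drifts) with accelerometer tilt (noisy, drift-free).

    samples: iterable of (gyro_rate_rad_per_s, ax, az) tuples, an assumed format.
    """
    pitch = 0.0
    for gyro_rate, ax, az in samples:
        predicted = pitch + gyro_rate * dt  # short term: trust the gyro
        measured = accel_pitch(ax, az)      # long term: anchor to gravity
        pitch = alpha * predicted + (1 - alpha) * measured
    return pitch


# Stationary robot leaning 0.1 rad; the gyro has a 0.02 rad/s bias.
tilt = 0.1
reading = (0.02, 9.81 * math.sin(tilt), 9.81 * math.cos(tilt))
estimate = complementary_filter([reading] * 5000, dt=0.002)  # 10 s at 500 Hz
print(f"fused pitch: {estimate:.4f} rad")
```

The fused estimate settles within about 0.002 rad of the true lean, whereas integrating the biased gyro alone for the same 10 seconds would drift by 0.2 rad. Real balance stacks often use richer estimators, such as Kalman filters over full 3D orientation; this is only the simplest illustration of the fusion idea.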

"Physical AI" is the fusion of these sensors with learned policies and planning. Think of it as the real‑world extension of generative AI: instead of generating text, the system generates force, motion, and reliable contact with messy environments.

Safety, ethics, and the humanoid form

Humanoid design buys compatibility with spaces built for people—stairs, door handles, carts, buttons. It also raises issues:

- Deception risk: If a robot can pass for human at a glance, standards for labeling and disclosure become essential.

- Social expectations: People infer intent from posture and gaze; design teams must tune motion to avoid misinterpretation.

- Fail‑safe behavior: Power loss, fall scenarios, and "stop" semantics need clear, tested protocols.
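"Stop" semantics are easier to review and test once they are written down as an explicit state machine. The sketch below is a hypothetical, simplified model, not any vendor's safety controller and not a certified implementation of a safety standard. A detected hazard pauses motion and resumes it once cleared. An emergency stop latches, and after an operator reset the robot sits idle until it receives a fresh start command. Event names are illustrative.

```python
from enum import Enum


class State(Enum):
    READY = "ready"        # powered, not moving
    RUNNING = "running"
    PAUSED = "paused"      # protective stop; resumes when the hazard clears
    ESTOPPED = "estopped"  # latched emergency stop


class StopController:
    """Hypothetical, simplified stop logic: the e-stop always wins and latches."""

    def __init__(self) -> None:
        self.state = State.READY

    def on_event(self, event: str) -> State:
        if event == "estop_pressed":
            self.state = State.ESTOPPED
        elif self.state is State.ESTOPPED:
            # Only an explicit operator reset leaves the latched state,
            # and it returns to READY; motion needs a fresh "start".
            if event == "operator_reset":
                self.state = State.READY
        elif event == "hazard_detected" and self.state is State.RUNNING:
            self.state = State.PAUSED
        elif event == "hazard_cleared" and self.state is State.PAUSED:
            self.state = State.RUNNING
        elif event == "start" and self.state is State.READY:
            self.state = State.RUNNING
        # Any other event is ignored and leaves the state unchanged.
        return self.state


ctl = StopController()
for event in ["start", "hazard_detected", "hazard_cleared",
              "estop_pressed", "hazard_cleared", "operator_reset", "start"]:
    print(f"{event:16} -> {ctl.on_event(event).value}")
```

Note the fifth event: a cleared hazard does not release a latched e‑stop. Encoding that rule in code makes it something you can test on every release rather than a line in a manual.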

Physical AI ROI: Where It Delivers First

Humanoids aren't a silver bullet. But paired with agentic software, they can unlock specific workflows that used to stall automation projects.

Near‑term sweet spots

- Back‑of‑house logistics: tote moves, shelf restocks, box breakdown, cycle counts during off‑hours.

- Manufacturing support: kitting, material handling, tool fetch/return between cells without re‑architecting lines.

- Retail and hospitality: guided wayfinding, inventory checks, overflow cleaning, event setup/teardown.

- Facilities: routine inspection (lights, leaks, temperature anomalies) with photo/video evidence into your ticketing system.

What not to automate yet

- High‑speed precision tasks with tight takt times in open production lines.

- Safety‑critical interactions without ample containment and supervision.

- Workflows with ambiguous responsibility that invite "automation surprise."

A realistic pilot pattern

- Scope: 1 site, 1 shift, 2 repeatable tasks with clear success metrics.

- Targets: 15‑30% time reclaimed for human staff or measurable error reduction.

- Integration: connect to WMS/TMS/CMMS via APIs and use simple prompts for task orchestration.

- Data: capture video + logs to refine policies weekly; plan a hard ROI review at week 8.
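The time‑reclaimed target is simple arithmetic, but it helps to compute it the same way every week so the week‑8 review isn't a debate about method. A minimal sketch, assuming you log human‑minutes spent on the pilot tasks before the robot (baseline) and during each pilot week; the 15% floor is the low end of the 15‑30% target above, and the weekly numbers are invented for illustration.

```python
def time_reclaimed_pct(baseline_min: float, pilot_min: float) -> float:
    """Percentage of human task minutes the pilot took off staff's plate."""
    return 100.0 * (baseline_min - pilot_min) / baseline_min


def week8_review(weekly: list[tuple[float, float]], floor_pct: float = 15.0):
    """weekly: (baseline_min, pilot_min) per week; judge the latest week against the floor."""
    trend = [time_reclaimed_pct(b, p) for b, p in weekly]
    return trend, trend[-1] >= floor_pct


# Hypothetical log: 480 human-minutes per shift on the two pilot tasks before the robot.
weeks = [(480, 470), (480, 455), (480, 440), (480, 425),
         (480, 410), (480, 400), (480, 392), (480, 384)]
trend, meets_floor = week8_review(weeks)
print(" ".join(f"{pct:.1f}%" for pct in trend), "| meets 15% floor:", meets_floor)
```

Judging the latest week rather than the average rewards the weekly policy refinement the pilot is designed around; if you prefer a more conservative read, average the last two or three weeks instead.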

Tools and Trends: Models, Swarms, and Skills

The model rumor mill won't quit—GPT‑5.1, Grok 5, and Gemini 3.0 are all being discussed. Regardless of naming, version bumps typically bring three things that matter for operations:

- More reliable tool use: better function calling and structured outputs reduce brittle glue code.

- Longer context and memory: fewer hand‑offs between subtasks, cleaner agent plans.

- Safer autonomy: improved refusal handling and constraint following for on‑prem tasks.
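Even with better function calling, it's worth validating a model's proposed tool call before anything touches a real system, especially when the tool can move a robot. A minimal sketch, assuming the model has been asked to reply with JSON shaped like `{"tool": ..., "arguments": {...}}`. That shape, the tool names, and the handlers are hypothetical, not any model provider's API format.

```python
import json

# Hypothetical tool registry: name -> (required argument names, handler).
TOOLS = {
    "create_ticket": ({"site", "summary"},
                      lambda a: f"ticket opened at {a['site']}: {a['summary']}"),
    "move_tote": ({"tote_id", "destination"},
                  lambda a: f"tote {a['tote_id']} queued for {a['destination']}"),
}


def dispatch(model_output: str) -> str:
    """Run a model-proposed tool call only if it parses and matches the registry."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError:
        return "rejected: not valid JSON"
    if not isinstance(call, dict):
        return "rejected: expected a JSON object"
    tool = call.get("tool")
    if not isinstance(tool, str) or tool not in TOOLS:
        return f"rejected: unknown tool {tool!r}"
    args = call.get("arguments")
    if not isinstance(args, dict):
        return "rejected: arguments must be an object"
    required, handler = TOOLS[tool]
    missing = required - set(args)
    if missing:
        return f"rejected: missing {sorted(missing)}"
    return handler(args)


print(dispatch('{"tool": "move_tote", "arguments": {"tote_id": "T-17", "destination": "dock 3"}}'))
print(dispatch('{"tool": "move_tote", "arguments": {"tote_id": "T-17"}}'))
```

The first call runs; the second is rejected because `destination` is missing. More reliable structured outputs mean fewer rejections, not that the check can be dropped.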

Treat the headlines as a nudge to upgrade your orchestration, not a reason to pause. The bigger unlock right now is architectural: ensembles and swarms.

Swarm AI: small models, big wins

Fortytwo‑style "Swarm AI" points to a cost‑effective pattern: many lightweight models and agents collaborating with routing, voting, and role specialization.

- Use small models for classification, retrieval, and monitoring.

- Reserve frontier models for reasoning bottlenecks only.

- Add a coordinator agent to verify steps, enforce constraints, and trigger physical actions.

Result: lower latency and cost with better fault isolation—exactly what you want before pairing software with a humanoid.
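Here is a generic voting‑with‑escalation sketch of that pattern. It is not Fortytwo's actual protocol. Three small classifiers vote, a stand‑in for an expensive frontier model is called only when they don't agree unanimously, and a coordinator refuses any action outside an allow‑list before a physical task is triggered. The "models" are keyword rules so the example runs; every function name and label here is hypothetical.

```python
from collections import Counter


# Stand-ins for three small classifiers. In a real swarm these would be separate
# lightweight models; simple keyword rules keep the sketch runnable.
def small_a(text: str) -> str:
    return "restock" if "empty" in text else "inspect"


def small_b(text: str) -> str:
    return "restock" if "shelf" in text else "inspect"


def small_c(text: str) -> str:
    return "restock" if "empty" in text or "low" in text else "inspect"


def frontier(text: str) -> str:
    """Placeholder for an expensive frontier-model call, used only on disagreement."""
    return "inspect"


ALLOWED_ACTIONS = {"restock", "inspect"}  # coordinator's constraint list


def route(text: str, quorum: int = 3) -> tuple[str, str]:
    """Return (action, who decided). Escalate unless `quorum` small models agree."""
    votes = Counter(model(text) for model in (small_a, small_b, small_c))
    action, count = votes.most_common(1)[0]
    source = "swarm"
    if count < quorum:
        action, source = frontier(text), "frontier"
    # Coordinator check: never trigger a physical action outside the allow-list.
    if action not in ALLOWED_ACTIONS:
        return "blocked", source
    return action, source


for report in ["shelf 4 is empty", "stock is low near dock", "leak near dock"]:
    print(report, "->", route(report))
```

Only the second report, where the small models split 2‑1, reaches the frontier stand‑in. Raising or lowering `quorum` is the main knob for trading cost against caution.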

Skills: train the team, not just the model

A simple, structured course like Google's AI Essentials can upskill non‑technical staff fast. Focus on:

- Writing task‑centric prompts that specify inputs, outputs, tools, and guardrails.

- Reviewing model outputs with checklists and confidence thresholds.

- Logging failures and iterating weekly like a product team.
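A lightweight way to make "task‑centric prompts" a team habit is a shared template that forces each of those fields to be filled in, plus an explicit review threshold. A minimal sketch: the field names, the example task, the tools `read_wms` and `create_ticket`, and the 0.8 threshold are all hypothetical, and the confidence score is assumed to come from somewhere you trust (a classifier or a calibrated check), not a value this code produces.

```python
from dataclasses import dataclass, field


@dataclass
class TaskPrompt:
    """Task-centric prompt: inputs, expected output, allowed tools, guardrails."""
    task: str
    inputs: list[str]
    output_format: str
    tools: list[str] = field(default_factory=list)
    guardrails: list[str] = field(default_factory=list)

    def render(self) -> str:
        lines = [f"Task: {self.task}", "Inputs:"]
        lines += [f"- {item}" for item in self.inputs]
        lines.append(f"Output format: {self.output_format}")
        lines.append("Allowed tools: " + (", ".join(self.tools) or "none"))
        lines.append("Guardrails:")
        lines += [f"- {rule}" for rule in self.guardrails]
        return "\n".join(lines)


def needs_human_review(confidence: float, threshold: float = 0.8) -> bool:
    """Send low-confidence outputs to a reviewer checklist instead of auto-accepting."""
    return confidence < threshold


prompt = TaskPrompt(
    task="Cycle-count aisle 7 and flag discrepancies",
    inputs=["WMS expected quantities (CSV)", "shelf photos from the robot camera"],
    output_format='JSON list of {"sku", "expected", "counted"}',
    tools=["read_wms", "create_ticket"],
    guardrails=["read-only access to WMS", "escalate if any count differs by more than 10%"],
)
print(prompt.render())
print("needs review:", needs_human_review(0.72))
```

Keeping templates in version control also gives you the weekly failure log for free: when a prompt changes, the diff shows exactly what was tried.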

Workforce reality check

You've seen the headlines about tech layoffs and role realignment. The pragmatic move is to pair automation pilots with reskilling:

- Map at‑risk tasks to higher‑value roles in QA, customer experience, and automation oversight.

- Establish a "human in the loop" policy and publish it internally.

- Incentivize continuous learning tied to operational metrics, not vanity badges.

How to Act Now: A 90‑Day Physical AI Plan

Days 0‑30: Identify and de‑risk

- Pick three candidate tasks using the 3R test: repetitive, rules‑bound, and risky/undesirable for humans.

- Validate safety: define zones, emergency stops, and monitoring.

- Shortlist vendors: compare humanoids to mobile manipulators and cobots; pick form factor based on space, not hype.

- Define success: set targets for time saved and error rate, plus incident thresholds. Assign a single owner to the tracking spreadsheet.
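The 3R test can be turned into a quick, repeatable shortlist. A minimal sketch, assuming each candidate task is scored yes/no on the three criteria and that a task needs at least two of three to qualify. That cut‑off, like the example tasks, is an assumption to tune, not part of the test as described above.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    repetitive: bool
    rules_bound: bool
    risky_or_undesirable: bool

    def score(self) -> int:
        """Number of 3R criteria the task meets (0-3)."""
        return sum((self.repetitive, self.rules_bound, self.risky_or_undesirable))


def shortlist(candidates: list[Candidate], k: int = 3, min_score: int = 2) -> list[str]:
    """Best-scoring tasks first; ties keep their original order (sorted is stable)."""
    passing = [c for c in candidates if c.score() >= min_score]
    ranked = sorted(passing, key=lambda c: c.score(), reverse=True)
    return [c.name for c in ranked[:k]]


tasks = [
    Candidate("Off-hours tote moves", True, True, False),
    Candidate("Box breakdown", True, True, True),
    Candidate("Customer returns triage", False, False, False),
    Candidate("Freezer cycle counts", True, True, True),
    Candidate("Event setup", False, True, True),
]
print(shortlist(tasks))
```

Because ties keep input order, list candidates in your own priority order (for example, by expected volume) so the tie‑breaks reflect something real.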

Days 31‑60: Pilot and integrate

- Stand up a sandbox: connect to your WMS/CMMS/Helpdesk with read‑only access.

- Build an agent layer: prompt templates for tasks, tool lists, and escalation rules.

- Run supervised shifts: start with no more than two robots per operator; log every exception.

- Review weekly: fix the top 3 failure modes; freeze scope creep.
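"Fix the top 3 failure modes" only works if the exception log is tallied the same way each week. A minimal sketch using Python's standard `collections.Counter`, assuming each logged exception is recorded as a `(robot_id, failure_mode)` pair. The log contents and failure‑mode labels are invented for illustration.

```python
from collections import Counter

# Hypothetical exception log from supervised shifts: (robot_id, failure_mode).
exceptions = [
    ("r1", "grasp_slip"), ("r2", "path_blocked"), ("r1", "grasp_slip"),
    ("r2", "barcode_unreadable"), ("r1", "path_blocked"), ("r2", "grasp_slip"),
    ("r1", "low_battery"), ("r2", "path_blocked"), ("r1", "grasp_slip"),
]


def top_failure_modes(log: list[tuple[str, str]], n: int = 3) -> list[tuple[str, int]]:
    """Most frequent failure modes this week; equal counts keep first-seen order."""
    return Counter(mode for _, mode in log).most_common(n)


def exceptions_per_robot(log: list[tuple[str, str]]) -> dict[str, int]:
    """Helps check whether one operator can realistically cover both robots."""
    return dict(Counter(robot for robot, _ in log))


print(top_failure_modes(exceptions))
print(exceptions_per_robot(exceptions))
```

Here `grasp_slip` (4) and `path_blocked` (3) clearly lead; the third slot is a tie at one occurrence each, which is a cue to look at severity, not just frequency, before choosing what to fix.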

Days 61‑90: Prove and plan

- Quantify ROI: labor hours reclaimed, throughput lift, defect reduction, downtime avoided.

- Decide scale or shelve: if targets are missed, write a post‑mortem and pivot to different tasks.

- Draft policy: disclosure (no deceptive anthropomorphism), data retention, and safety‑event reporting.

- Budget 2026: allocate for 1‑2 additional sites, training seats, and MLOps for on‑prem edge models.
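The scale‑or‑shelve call is easier to defend when each KPI's baseline, pilot value, and minimum acceptable improvement are written down before the review. A minimal sketch under those assumptions: the KPI names, values, and targets are hypothetical, and "improvement" is signed so that a positive number always means better, whether the metric should go up (throughput) or down (hours, defects, downtime).

```python
def pct_change(before: float, after: float) -> float:
    return 100.0 * (after - before) / before


# Hypothetical pilot KPIs: name -> (baseline, pilot, higher_is_better)
kpis = {
    "labor_hours_per_week": (120.0, 96.0, False),
    "units_per_hour": (200.0, 212.0, True),
    "defects_per_1k": (8.0, 6.0, False),
    "downtime_hours": (10.0, 9.0, False),
}


def improvements(kpis: dict) -> dict[str, float]:
    """Percent change per KPI, signed so that a positive number always means better."""
    result = {}
    for name, (before, after, higher_is_better) in kpis.items():
        change = pct_change(before, after)
        result[name] = change if higher_is_better else -change
    return result


def decide(kpis: dict, targets: dict[str, float]) -> tuple[str, list[str]]:
    """'scale' if every targeted KPI hits its minimum improvement, else 'shelve' + misses."""
    gains = improvements(kpis)
    missed = [name for name, minimum in targets.items() if gains[name] < minimum]
    return ("shelve", missed) if missed else ("scale", [])


print(improvements(kpis))
print(decide(kpis, {"labor_hours_per_week": 15.0, "defects_per_1k": 10.0}))
print(decide(kpis, {"units_per_hour": 10.0}))
```

With these invented numbers, labor hours improve 20% and defects 25%, so a pilot judged on those two scales. A pilot judged on throughput (only a 6% lift) would be shelved, and the returned list of misses becomes the starting point for the post‑mortem.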

Conclusion: From Spectacle to Systems

The Xpeng IRON robot demo wasn't just a stunt—it was a timestamp. Humanoids with 80+ DOF, real sensors, and tight control loops are moving from labs to line items. The winners in 2026 will pair agentic software, swarm architectures, and clear governance to put physical AI to work fast and safely.

If you're ready to move, start a 90‑day pilot, upskill your team, and document your guardrails. For deeper playbooks and weekly workflow drops, sign up for our free daily newsletter, join our community for hands‑on tutorials, and explore the AI Fire Academy for advanced automation workflows. Your competitive edge will come from execution—begin now.

In one sentence: the Xpeng IRON robot shows that physical AI is here; your next step is to turn that reality into repeatable, auditable outcomes.