Choose the right LLM design for real work. Compare dense, MoE, and hybrid stacks, then turn architecture into faster, cheaper, higher‑quality output.

Modern LLM Architecture Comparison for Productivity

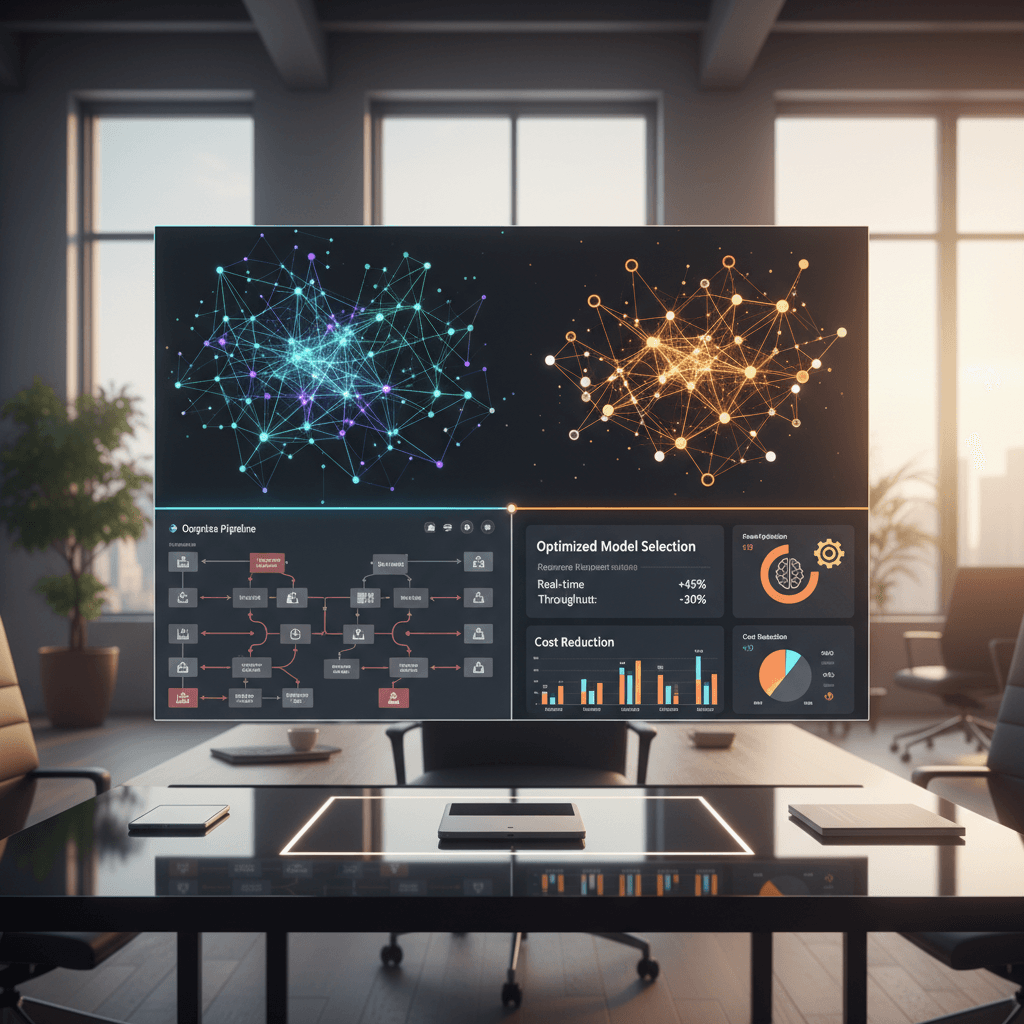

As 2025 winds down and teams plan next year's stack, one question keeps surfacing: which large language model architecture will actually make work faster? This LLM architecture comparison cuts through the hype to show how design choices—from dense Transformers to mixture‑of‑experts and retrieval‑first stacks—translate into concrete gains in cost, speed, and output quality.

Models across the spectrum, from large MoE systems like DeepSeek‑V3 to long‑context assistants such as the Kimi series (including K2), reflect a broader shift: modern AI is less about raw capability and more about fit-for-purpose productivity. In our AI & Technology series, we focus on turning technical trends into daily wins, so you can work smarter, not harder, powered by AI.

Below, you'll get a practical map of current LLM architecture design, how it impacts your workflow, and a simple decision framework to choose the right model for your use case.

Why LLM Architecture Matters for Everyday Work

The fastest way to unlock productivity is to align architecture with workload. A dense, general-purpose model might dazzle in demos, but your team's needs—document processing, code assistance, analytics, customer support—demand specific strengths.

- Dense models shine at broad generalization and single-stream quality.

- MoE (mixture‑of‑experts) models excel at throughput and scale by activating only a subset of parameters per token.

- Retrieval‑aware stacks reduce hallucinations and keep costs stable on knowledge-heavy tasks by pulling facts from your data.

These choices aren't academic. Architecture determines latency, total cost of ownership, and the reliability of answers your team can trust. A thoughtful LLM architecture comparison up front can save months of trial-and-error and thousands in compute.

Dense vs Mixture‑of‑Experts vs Hybrid: What's Changing

Modern LLM design clusters around three families: dense Transformers, sparse MoE, and hybrid approaches that blend routing, retrieval, and specialized heads.

Dense Transformers

Dense models keep all parameters "on" for every token. They tend to:

- Deliver strong single-sample quality and consistent behavior

- Be easier to fine-tune for niche tasks

- Consume more compute per token than a sparse model of similar total size, with long contexts adding attention and KV cache cost on top

When to choose dense:

- High-stakes reasoning where consistency matters

- Smaller-scale deployments where simplicity beats maximum throughput

- Teams planning domain fine-tunes (e.g., legal, medical, policy)

Mixture‑of‑Experts (MoE)

MoE introduces sparse routing: a router activates only a few experts (sub-networks) per token, so total parameter count can grow without a proportional increase in per-token compute. Systems like DeepSeek‑V3 exemplify this trend toward expert specialization and efficient compute use.
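To make sparse routing concrete, here is a minimal top-k gating sketch in plain Python. It is an illustration under simplifying assumptions, not the router of DeepSeek‑V3 or any other specific model: the names `top_k_route` and `moe_layer`, the toy experts, and the fixed router scores are all hypothetical, and production routers add load balancing and run on batched tensors.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k=2):
    """Return [(expert_index, weight)] for the k highest-scoring experts.

    Weights are a softmax over the selected logits only, so they sum to 1.
    """
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_layer(token, experts, router, k=2):
    """Run only the k selected experts and mix their outputs by router weight."""
    out = [0.0] * len(token)
    for idx, weight in top_k_route(router(token), k):
        expert_out = experts[idx](token)  # the other experts are never evaluated
        out = [o + weight * y for o, y in zip(out, expert_out)]
    return out

if __name__ == "__main__":
    # Four toy "experts" that just scale the input (hypothetical stand-ins).
    experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
    router = lambda v: [0.1, 2.0, 0.3, 2.0]  # fixed scores for illustration
    print(moe_layer([1.0, 1.0], experts, router, k=2))
```

With four experts and `k=2`, each token pays for two expert evaluations instead of four, which is the source of the throughput advantage described in this section.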

Benefits you'll notice at work:

- Higher tokens-per-second per dollar for batch-heavy workloads

- Competitive quality driven by specialization of experts

- Better scaling characteristics for large teams and multi-tenant traffic

Watchouts:

- More complex serving (routing, load balancing, caching)

- Quality can vary across domains without careful data and fine-tuning

Hybrid and Long‑Context Assistants

Many assistants pair dense or MoE backbones with long-context optimizations, retrieval, and tool calling. The Kimi series (including K2) reflects this shift toward assistants designed to ingest large documents, keep context coherent, and ground responses in external knowledge.

Common hybrid ingredients:

- Long-context attention optimizations (e.g., sliding windows, efficient KV cache)

- Retrieval-augmented generation (RAG) and reranking for factual grounding

- Function calling and tool-use for calculations, web forms, or analytics

Bottom line: Dense favors simplicity and consistency; MoE drives throughput and cost efficiency; hybrids wrap either backbone with retrieval and tools to boost reliability on real-world, document-heavy work.
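A quick back-of-the-envelope comparison shows where the trade-off comes from. The sketch below uses the common rule of thumb of roughly 2 FLOPs per active parameter per generated token, which ignores attention cost and is only an approximation. The 70B dense model is a hypothetical example; the MoE figures are DeepSeek‑V3's publicly reported 671B total and 37B activated parameters. The function names are illustrative.

```python
def forward_flops_per_token(active_params: float) -> float:
    """Rule of thumb: about 2 FLOPs per active parameter per generated token."""
    return 2 * active_params

def weight_memory_gb(total_params: float, bytes_per_param: float) -> float:
    """Memory needed to hold the weights; every expert is resident, active or not."""
    return total_params * bytes_per_param / 1e9

if __name__ == "__main__":
    models = {
        "dense (hypothetical 70B)": {"total": 70e9, "active": 70e9},
        "MoE (671B total, 37B active)": {"total": 671e9, "active": 37e9},
    }
    for name, m in models.items():
        gflops = forward_flops_per_token(m["active"]) / 1e9
        mem = weight_memory_gb(m["total"], bytes_per_param=1)  # assumes 8-bit weights
        print(f"{name}: ~{gflops:.0f} GFLOPs/token, ~{mem:.0f} GB of weights")
```

Under these assumptions, the MoE model does about half the per-token compute of the dense one while needing almost ten times the weight memory, which is why MoE tends to pay off at high, batched traffic rather than on a single small deployment.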

Retrieval, Tools, and Orchestration: The Real Productivity Stack

The base model is only half the story. Teams that see the biggest productivity gains pair the right backbone with a well-architected inference and data layer.

Retrieval‑Augmented Generation (RAG)

RAG grounds the model in your knowledge base to reduce hallucinations and keep responses current without retraining. Key components:

- Chunking and embeddings tuned to your content types (PDFs, tickets, code)

- A vector index plus a reranker to keep only the most relevant passages

- Lightweight guardrails to enforce citation, structure, and tone
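The components above can be sketched end to end in a few lines. This is a toy pipeline under loud assumptions: `embed` is a bag-of-words stand-in for a real embedding model, `rerank` is a term-coverage stand-in for a real reranker, and an in-memory list replaces the vector index. All function names are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: bag-of-words counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def chunk(text, max_words=40):
    """Split a document into fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def retrieve(query, chunks, top_n=5):
    """First stage: rank all chunks by embedding similarity, keep the top_n."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:top_n]

def rerank(query, candidates, keep=2):
    """Second stage (stand-in reranker): share of query terms each passage covers."""
    q_terms = set(embed(query))
    def coverage(passage):
        return len(q_terms & set(embed(passage))) / len(q_terms) if q_terms else 0.0
    return sorted(candidates, key=coverage, reverse=True)[:keep]

def build_prompt(query, passages):
    """Guardrail in prompt form: numbered passages plus a citation instruction."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return ("Answer using only the numbered passages and cite them.\n"
            f"{context}\n\nQuestion: {query}")

if __name__ == "__main__":
    docs = ["Refunds are issued within 14 days of a return request.",
            "Our office is closed on public holidays.",
            "Warranty covers battery defects for two years."]
    question = "How many days until refunds are issued?"
    print(build_prompt(question, rerank(question, retrieve(question, docs), keep=1)))
```

The two-stage shape is the point: a cheap, broad retrieval step followed by a narrower, more careful rerank keeps the prompt short, which helps both cost and answer quality.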

When RAG is enough:

- Policies, product catalogs, help center articles, internal wikis

- Fast-changing facts where fine-tuning would quickly go stale

Tool Use and Function Calling

Let the model call calculators, analytics engines, or internal APIs. Use cases:

- Sales/finance: quote building, margin checks, forecasting

- Support: troubleshooting flows, warranty checks, returns

- Engineering: test generation, dependency lookups, CI actions
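In most tool-calling setups, the model emits a structured request and your code decides whether and how to run it. The sketch below assumes the model returns a JSON object with `name` and `arguments` keys; that shape, the `margin_check` tool, and its 20% threshold are hypothetical, and each provider's actual function-calling format differs.

```python
import json

TOOLS = {}

def tool(fn):
    """Register a plain Python function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def margin_check(price: float, cost: float) -> dict:
    """Hypothetical finance tool: compute margin and flag deals under 20%."""
    if price <= 0:
        raise ValueError("price must be positive")
    margin = (price - cost) / price
    return {"margin": round(margin, 4), "ok": margin >= 0.2}

def dispatch(model_output: str) -> dict:
    """Parse the model's tool-call JSON and run the named tool.

    Errors are returned as data so they can be fed back to the model
    instead of crashing the workflow.
    """
    try:
        call = json.loads(model_output)
        fn = TOOLS[call["name"]]
        return {"result": fn(**call["arguments"])}
    except (ValueError, KeyError, TypeError) as exc:
        return {"error": f"{type(exc).__name__}: {exc}"}

if __name__ == "__main__":
    print(dispatch('{"name": "margin_check", "arguments": {"price": 100, "cost": 70}}'))
    print(dispatch('{"name": "delete_everything", "arguments": {}}'))
```

Keeping an explicit registry means the model can only reach functions you have deliberately exposed, which is a simple but effective safety boundary.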

Orchestration Patterns

- Router models: route requests by intent to different backbones (dense vs MoE)

- Planner-executor: a small model plans the steps; a larger model carries out the reasoning for each step

- Multistage quality: draft with a fast model; refine with a higher-quality model

These patterns deliver tangible productivity: lower latency for routine tasks, and higher reliability for complex ones.
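As a rough sketch, the router and draft-then-refine patterns can be combined in a few functions. Everything here is an assumption for illustration: the models are plain callables, the keyword list stands in for a small intent classifier, and `needs_refine` stands in for whatever quality check you use.

```python
from typing import Callable

Model = Callable[[str], str]

def route(request: str, routine_keywords=("reset", "status", "invoice")) -> str:
    """Toy intent router: a keyword match stands in for a small classifier model."""
    text = request.lower()
    return "fast" if any(k in text for k in routine_keywords) else "quality"

def draft_then_refine(request: str, fast: Model, quality: Model,
                      needs_refine: Callable[[str], bool]) -> str:
    """Draft with the fast model; call the stronger model only if the draft fails a check."""
    draft = fast(request)
    if not needs_refine(draft):
        return draft
    return quality(f"Improve this draft.\nRequest: {request}\nDraft: {draft}")

def handle(request: str, fast: Model, quality: Model,
           needs_refine: Callable[[str], bool]) -> str:
    """Routine requests go straight to the fast tier; the rest get draft-then-refine."""
    if route(request) == "fast":
        return fast(request)
    return draft_then_refine(request, fast, quality, needs_refine)

if __name__ == "__main__":
    fast = lambda prompt: "fast answer"        # stub for a cheap model
    quality = lambda prompt: "refined answer"  # stub for a stronger model
    too_short = lambda draft: len(draft) < 50  # hypothetical quality check
    print(handle("What is my order status?", fast, quality, too_short))
    print(handle("Compare these two contracts.", fast, quality, too_short))
```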

Multimodal and Long Context: Designing for Real Documents

Modern work is multimodal: screenshots, spreadsheets, PDFs, meeting audio, and code all collide in a single task. Architectures are evolving to make this seamless.

Multimodal Models

Vision-text and audio-text models let teams:

- Extract tables from invoices or receipts

- Summarize meetings and produce action items

- Interpret charts and generate narratives for dashboards

Tip: For structured documents, pair multimodal extraction with a schema-aware post-processor that rejects malformed results, so your systems only receive JSON or tabular output they can trust.
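A schema-aware post-processor can be very small. The sketch below checks a model's extraction against a hypothetical invoice schema using only the standard library; the field names and the `validate_extraction` helper are assumptions, and a real deployment would more likely use a dedicated schema library.

```python
import json

# Hypothetical schema: field name -> required Python type.
INVOICE_SCHEMA = {"invoice_id": str, "total": float, "line_items": list}

def validate_extraction(raw: str, schema=INVOICE_SCHEMA):
    """Return (record, errors); record is None when validation fails."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["top-level value must be an object"]
    errors = []
    for field, expected in schema.items():
        if field not in data:
            errors.append(f"missing field: {field}")
            continue
        value = data[field]
        is_plain_int = isinstance(value, int) and not isinstance(value, bool)
        if expected is float and is_plain_int:
            data[field] = float(value)  # accept 120 where 120.0 is expected
        elif not isinstance(value, expected):
            errors.append(f"wrong type for {field}: expected {expected.__name__}")
    return (data if not errors else None), errors

if __name__ == "__main__":
    print(validate_extraction('{"invoice_id": "A-1", "total": 120, "line_items": []}'))
    print(validate_extraction('{"invoice_id": 7}'))
```

When validation fails, the error list can be sent back to the model as a retry prompt or routed to a human reviewer.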

Long-Context Strategies

Long-context settings (hundreds of thousands to millions of tokens) help with:

- Full-document QA for contracts, RFPs, or research reports

- Project handovers and large codebase comprehension

But context isn't free. Evaluate:

- Memory footprint and KV cache size vs. batch throughput

- Attention strategies that avoid quadratic blowups

- Summarization and map-reduce patterns to control cost

In practice, many teams combine moderate context windows with smart retrieval and summarization to hit the sweet spot on speed and spend.
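To see why context isn't free, it helps to estimate KV cache size directly. The sketch below uses the standard formula for multi-head or grouped-query attention: two tensors (keys and values) per layer, per KV head, per cached token. The model shape is hypothetical, the `window` parameter assumes every layer uses the same sliding window, and architectures that compress the cache will come in lower.

```python
def kv_cache_bytes(seq_len, layers, kv_heads, head_dim,
                   bytes_per_elem=2, batch=1, window=None):
    """Approximate KV cache size in bytes.

    window: if set, only the most recent `window` tokens are cached
    (sliding-window attention), which caps memory for long inputs.
    """
    cached_tokens = seq_len if window is None else min(seq_len, window)
    return 2 * layers * kv_heads * head_dim * cached_tokens * bytes_per_elem * batch

if __name__ == "__main__":
    # Hypothetical model shape: 32 layers, 8 KV heads, head_dim 128, 16-bit cache.
    shape = dict(layers=32, kv_heads=8, head_dim=128)
    full = kv_cache_bytes(131_072, **shape)
    windowed = kv_cache_bytes(131_072, window=4_096, **shape)
    print(f"full 128K context:  {full / 2**30:.1f} GiB per sequence")
    print(f"4K sliding window:  {windowed / 2**30:.1f} GiB per sequence")
```

For this hypothetical shape, each sequence at a 128K-token context needs 16 GiB of cache, so batch size (and therefore throughput) drops quickly as context grows. That is the pressure that pushes many teams toward moderate windows plus retrieval.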

A Practical Framework to Choose the Right Model

Use this simple decision path to match architecture to workload.

- Define the job-to-be-done

  - Content creation and editing

  - Analytics and reporting

  - Customer support and service

  - Code assistance and DevOps

- Prioritize constraints

  - Latency: real-time chat vs. async processing

  - Cost: tokens/day, peak vs. average load

  - Privacy: on-prem, VPC, or strict data retention

- Pick the backbone

  - Dense: consistent reasoning, easier fine-tunes

  - MoE: high throughput, better $/token

  - Hybrid: retrieval and tools for grounded, dynamic tasks

- Tune the stack

  - Start with prompt patterns and structured output schemas

  - Add RAG for factuality; include reranking and guardrails

  - Fine-tune or LoRA if you need consistent tone or domain nuance

- Validate before you scale

  - Create a small but representative eval set (50–200 items)

  - Track accuracy, latency, and cost per task—not just per token

  - Add human review checkpoints where risk is high
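The validation step can start as a very small harness. The sketch below assumes your model wrapper returns both an answer and the number of tokens used, and it scores answers by substring match, which is a crude stand-in for a real grader. `EvalItem`, `evaluate`, and the price are all hypothetical.

```python
import time
from dataclasses import dataclass

@dataclass
class EvalItem:
    prompt: str
    expected: str  # text the answer must contain to count as correct

def evaluate(model, items, price_per_1k_tokens):
    """Run model(prompt) -> (answer, tokens_used) over the eval set.

    Reports accuracy, median latency, and cost per task.
    """
    if not items:
        raise ValueError("eval set is empty")
    correct, total_tokens, latencies = 0, 0, []
    for item in items:
        start = time.perf_counter()
        answer, tokens = model(item.prompt)
        latencies.append(time.perf_counter() - start)
        total_tokens += tokens
        correct += int(item.expected.lower() in answer.lower())
    n = len(items)
    latencies.sort()
    return {
        "accuracy": correct / n,
        "p50_latency_s": latencies[n // 2],  # upper median when n is even
        "cost_per_task": total_tokens / 1000 * price_per_1k_tokens / n,
    }

if __name__ == "__main__":
    items = [EvalItem("Capital of France?", "Paris"),
             EvalItem("Capital of Germany?", "Berlin")]
    stub_model = lambda prompt: ("Paris", 500)  # stand-in for a real model call
    print(evaluate(stub_model, items, price_per_1k_tokens=0.002))
```

Running the same eval set against each candidate backbone gives you a like-for-like comparison on the three numbers that matter for the decision.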

Implementation Playbook: From Pilot to Production

Turn architecture choices into day-one productivity wins with a focused rollout.

30‑Day Pilot

- Week 1: Define two priority workflows; instrument baseline time/cost

- Week 2: Prototype prompts + RAG on a dense or MoE model; add tool calls

- Week 3: Build evaluation set; compare draft→refine orchestration vs single-pass

- Week 4: Lock guardrails, set SLAs, and document handoff process

Production Hardening

- Observability: log prompts, responses, retrieval hits, and tool calls

- Cost controls: batch where possible, cache frequent prompts, right-size context

- Quality gates: schema validation, fallback routing, human-in-the-loop for edge cases
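Several of these hardening steps fit in one thin wrapper around your model calls. The sketch below combines a prompt cache, a quality gate, and fallback routing, with simple counters for observability. `GuardedClient` is a hypothetical name, the models are plain callables, and a production cache would also need expiry and size limits.

```python
import hashlib

class GuardedClient:
    """Wraps two model callables with a prompt cache, a quality gate, and a fallback."""

    def __init__(self, primary, fallback, is_valid):
        self.primary = primary
        self.fallback = fallback
        self.is_valid = is_valid  # quality gate, e.g. a schema validator
        self.cache = {}
        self.stats = {"cache_hits": 0, "fallbacks": 0, "needs_review": 0}

    def _try(self, model, prompt):
        """Return a validated answer, or None if the call fails or the gate rejects it."""
        try:
            answer = model(prompt)
        except Exception:
            return None
        return answer if self.is_valid(answer) else None

    def complete(self, prompt):
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key in self.cache:
            self.stats["cache_hits"] += 1
            return self.cache[key]
        answer = self._try(self.primary, prompt)
        if answer is None:
            self.stats["fallbacks"] += 1
            answer = self._try(self.fallback, prompt)
        if answer is None:
            self.stats["needs_review"] += 1  # hand off to a human reviewer
            return None
        self.cache[key] = answer  # only validated answers are cached
        return answer

if __name__ == "__main__":
    def flaky(prompt):
        raise TimeoutError("primary unavailable")

    client = GuardedClient(flaky, lambda p: '{"ok": true}', lambda a: a.startswith("{"))
    print(client.complete("Summarize ticket 42"), client.stats)
    print(client.complete("Summarize ticket 42"), client.stats)
```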

Example Outcomes to Target

- 40–70% faster document responses by using retrieval + reranking

- 2–3x team throughput by routing routine tasks to a faster MoE tier

- Fewer escalations via tool use (calculations, policy checks) embedded in flows

Your exact numbers will vary, but the pattern is consistent: architecture + orchestration beats model choice alone.

Conclusion: Make Architecture Your Competitive Edge

If you remember one thing from this LLM architecture comparison, let it be this: align the model to the job and let your stack do the rest. Dense models bring stable reasoning, MoE delivers scale, and hybrid stacks with retrieval and tools make answers trustworthy.

Next steps:

- Map your top three workflows to the decision framework above

- Run a 30‑day pilot with clear success metrics and guardrails

- Standardize orchestration patterns that convert AI potential into daily productivity

The teams that win 2026 budgets will be the ones who turn architecture into outcomes. Which workload will you optimize first?