Teleoperation is cracking the robot training data bottleneck. Learn how to deploy robots and AI agents for speed without sacrificing quality—safely and at scale.

Why Robot Training Data Just Became Everyone's Priority

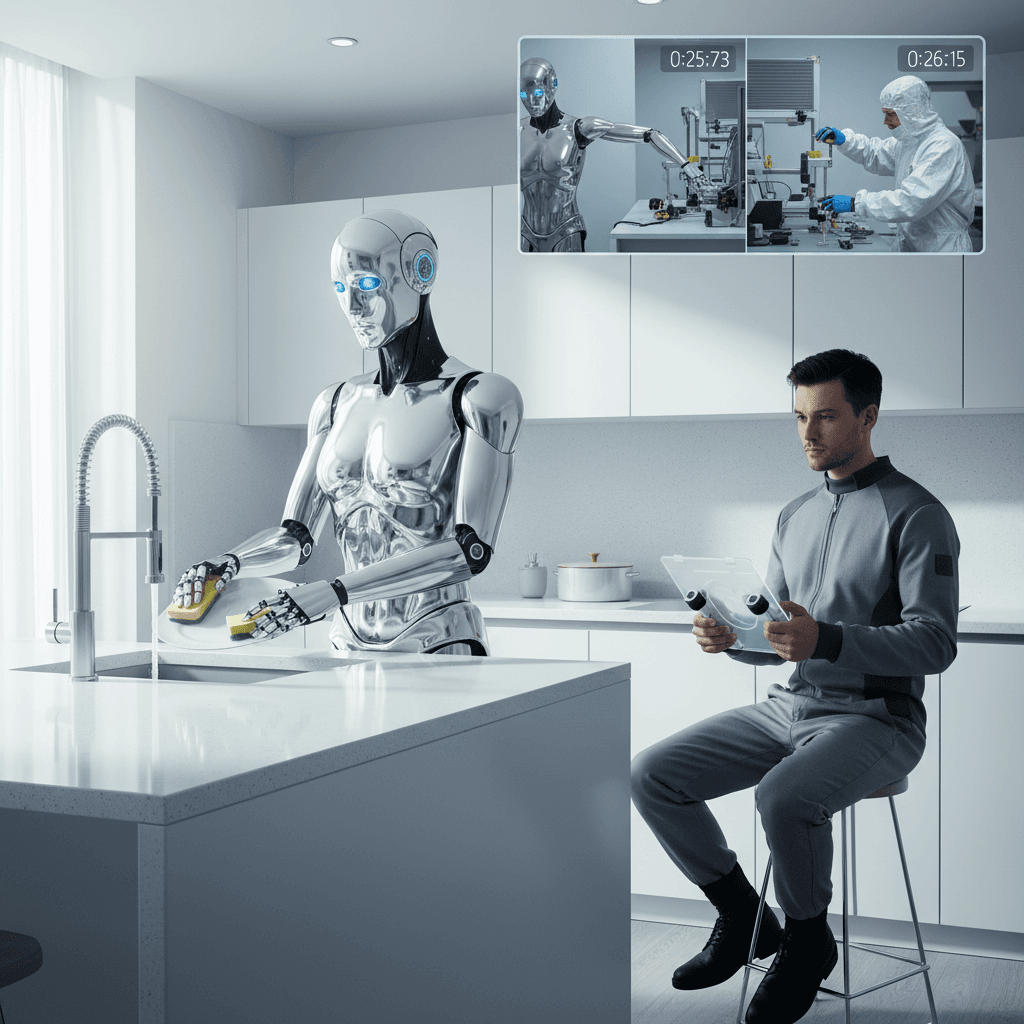

Robots that wash dishes and spar while learning aren't sci‑fi anymore—they're a signal that the biggest bottleneck in robotics, robot training data, is finally cracking. In recent demos, a human teleoperated a humanoid robot in real time, and the robot learned from those actions. That's a seismic shift for anyone planning 2026 automation roadmaps.

Why does this matter now? As we head into year‑end planning and a holiday labor crunch, operations leaders are hunting for reliable automation that improves quality, not just speed. This post covers what teleoperation means for robot training data, where AI agents beat humans and where they still fall short, how a new wave of health AI assistants could change patient experiences, and a practical playbook for capturing the value safely.

The Real Bottleneck: High‑Quality Robot Training Data

Robotics has long been constrained by data: specifically, the lack of diverse, high‑quality demonstrations across messy, real‑world environments. Unlike language‑based agents trained on billions of tokens of text, robots need sequences of motions paired with force feedback and scene context to generalize from "this sink" to "any sink."

Enter teleoperation. By letting a skilled human control the robot directly, companies like Unitree Robotics can capture task demonstrations quickly and safely. In recent showcases, a G1 humanoid executed household chores and physical drills under human guidance—turning living‑room complexity into learning material. Instead of relying solely on synthetic data or curated lab tasks, teams can build large corpora of real interactions, complete with edge cases that matter in production.

What changes with this approach:

- Faster data generation: One expert can produce dozens to hundreds of high‑fidelity demonstrations per day.

- Better generalization: Data spans real objects, layouts, and lighting conditions—not just lab setups.

- Safer iteration: The human supervises difficult steps while the robot gradually takes over.

Teleoperation reframes data collection from "rare and expensive" to "continuous and scalable"—the foundation for reliable autonomy.

From Teleoperation to Autonomy: How the Pipeline Works

1) Capture demonstrations

Operators guide the robot using wearables or handheld controllers. The system records trajectories, sensor streams, and contextual cues. Think of each run as a mini‑curriculum: pick up plate, align with sink, apply force, rinse, place on rack.
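To make "trajectories, sensor streams, and contextual cues" concrete, here is a minimal Python sketch of a demonstration record. The schema (`Step`, `Demonstration`, the field names, and sub-task labels such as `pick_plate`) is hypothetical, not any vendor's format; a real system would likely also store camera frames and per-sensor timestamps.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    """One timestep of a teleoperated run (hypothetical schema)."""
    t: float                      # seconds since the run started
    joint_positions: list[float]  # robot state at this timestep
    gripper_force: float          # force sensor reading, newtons
    action: list[float]           # operator's command at this timestep
    subtask: str                  # curriculum label, e.g. "rinse"


@dataclass
class Demonstration:
    task: str
    operator_id: str
    steps: list[Step] = field(default_factory=list)

    def record(self, step: Step) -> None:
        self.steps.append(step)

    def segments(self) -> list[tuple[str, int]]:
        """Collapse consecutive steps into (subtask, step_count) pairs."""
        out: list[tuple[str, int]] = []
        for s in self.steps:
            if out and out[-1][0] == s.subtask:
                out[-1] = (s.subtask, out[-1][1] + 1)
            else:
                out.append((s.subtask, 1))
        return out


# Usage: one short run of the dish-washing mini-curriculum.
demo = Demonstration(task="wash_dishes", operator_id="op-07")
labels = ["pick_plate", "pick_plate", "align_sink", "apply_force", "rinse", "place_rack"]
for i, label in enumerate(labels):
    demo.record(Step(t=i * 0.1, joint_positions=[0.0] * 7, gripper_force=2.0,
                     action=[0.0] * 7, subtask=label))
print(demo.segments())
```

Tagging every timestep with its sub-task label is what lets you later train or evaluate skills one segment at a time.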

2) Learn from imitation (safely)

The initial capability comes from behavior cloning: training a policy to reproduce the operator's actions from the same sensor inputs. Safety layers constrain motion, torque, and speed. As confidence grows, the robot executes sub‑tasks autonomously, and the operator intervenes only on tricky steps.
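The sketch below shrinks behavior cloning to its simplest form so the idea is visible: fit a policy to (state, action) pairs by least squares, then wrap it in a safety layer that clamps the command and hands control back to the operator when the robot sees a state outside the demonstrated range. It assumes a single scalar state and action; real policies are usually neural networks over many sensor channels, and `fit_linear_policy`, `SafetyLimits`, and `act_or_handoff` are illustrative names.

```python
from dataclasses import dataclass


def fit_linear_policy(states, actions):
    """Simplest possible behavior cloning: least-squares fit of
    action = w * state + b to (state, action) pairs from demonstrations."""
    n = len(states)
    mean_s = sum(states) / n
    mean_a = sum(actions) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in zip(states, actions))
    var = sum((s - mean_s) ** 2 for s in states)
    w = cov / var
    b = mean_a - w * mean_s
    return lambda s: w * s + b


@dataclass
class SafetyLimits:
    max_speed: float  # hypothetical joint-speed cap, rad/s


def act_or_handoff(policy, state, limits, seen_range):
    """Return a clamped command, or None to hand control back to the operator."""
    lo, hi = seen_range
    if not (lo <= state <= hi):
        return None  # outside the demonstrated states: operator takes over
    command = policy(state)
    return max(-limits.max_speed, min(limits.max_speed, command))  # safety clamp


# Usage: four demonstrated (state, action) pairs.
states, actions = [0.0, 1.0, 2.0, 3.0], [1.0, 1.5, 2.0, 2.5]
policy = fit_linear_policy(states, actions)
limits = SafetyLimits(max_speed=2.2)
print(act_or_handoff(policy, 2.0, limits, (0.0, 3.0)))  # within range, under the cap
print(act_or_handoff(policy, 3.0, limits, (0.0, 3.0)))  # clamped to the cap
print(act_or_handoff(policy, 9.0, limits, (0.0, 3.0)))  # handed back to the operator
```

Returning `None` for unfamiliar states is one simple way to encode "the operator intervenes only on tricky steps"; a production system could use richer uncertainty estimates instead of a fixed range.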

3) Rehearse and refine

Teams mix real demonstrations with simulated practice and self‑evaluation. World models, domain randomization, and curriculum learning help the robot generalize beyond the original room, objects, or lighting.
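Domain randomization and curriculum learning can be combined in a few lines: sample each simulated episode's lighting, geometry, and object properties from ranges that widen as training progresses. The parameter names and ranges below are hypothetical placeholders, and the linear widening schedule is just one simple choice of curriculum.

```python
import random

# Hypothetical parameter ranges; real ranges depend on your robot and sites.
BASE_RANGES = {
    "light_lux": (200.0, 800.0),
    "sink_height_m": (0.85, 0.95),
    "plate_mass_kg": (0.3, 0.6),
}


def randomize(ranges, level, rng):
    """Sample one simulated environment (domain randomization).

    level in [0, 1] is a simple curriculum: at 0 each parameter varies over
    the middle 25% of its range; at 1 it spans the full range."""
    env = {}
    for name, (lo, hi) in ranges.items():
        mid, half = (lo + hi) / 2, (hi - lo) / 2
        spread = half * (0.25 + 0.75 * level)
        env[name] = rng.uniform(mid - spread, mid + spread)
    return env


# Usage: widen the randomization as training progresses.
rng = random.Random(0)
for level in (0.0, 0.5, 1.0):
    print(level, randomize(BASE_RANGES, level, rng))
```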

4) Deploy, monitor, and expand

In production, the robot logs successes, failures, and near misses. Those logs feed the next training cycle, creating a self‑improving loop. Over time, you shift from "pilot tasks" to a reusable skills library: opening doors, sorting items, loading machines, or basic hospitality tasks.
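The logging loop can start very simply. This sketch assumes a hypothetical log format of one dict per episode with a `skill` and an `outcome` (`success`, `failure`, or `near_miss`); it reports per-skill success rates and queues failures and near misses as targets for the next round of teleoperated demonstrations.

```python
from collections import Counter, defaultdict


def success_rates(logs):
    """Per-skill success rate from production episode logs."""
    counts = defaultdict(Counter)
    for entry in logs:
        counts[entry["skill"]][entry["outcome"]] += 1
    return {skill: c["success"] / sum(c.values()) for skill, c in counts.items()}


def next_cycle_queue(logs):
    """Failures and near misses become targets for the next round of demos."""
    return [e for e in logs if e["outcome"] in ("failure", "near_miss")]


# Usage with the hypothetical log format: one dict per episode.
logs = [
    {"skill": "open_door", "outcome": "success"},
    {"skill": "open_door", "outcome": "near_miss"},
    {"skill": "open_door", "outcome": "failure"},
    {"skill": "open_door", "outcome": "success"},
    {"skill": "sort_items", "outcome": "success"},
    {"skill": "sort_items", "outcome": "success"},
]
print(success_rates(logs))          # {'open_door': 0.5, 'sort_items': 1.0}
print(len(next_cycle_queue(logs)))  # 2
```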

Actionable steps for operations teams:

- Select 2–3 high‑variance tasks where human demos add the most value (e.g., kitchen cleanup, bin picking in variable inventory).

- Define acceptance criteria up front: time to completion, first‑pass yield, rework rate, and safety events.

- Plan for data governance: who can record, where data lives, how you anonymize people and spaces.

- Budget for iteration cycles, not one‑off trials—teleoperation pays off as you accumulate diverse demonstrations.
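Acceptance criteria are easiest to enforce when they are written down as code before the pilot starts. A minimal sketch covering the four metrics above (time to completion, first‑pass yield, rework rate, safety events), assuming hypothetical `Run` and `Criteria` types and thresholds you would set yourself:

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Run:
    seconds: float          # time to completion
    passed_first_try: bool  # no human correction needed
    reworked: bool          # output had to be redone later
    safety_events: int      # e-stops, collisions, near misses


@dataclass
class Criteria:  # hypothetical thresholds agreed before the pilot
    max_median_seconds: float
    min_first_pass_yield: float
    max_rework_rate: float
    max_safety_events: int


def evaluate(runs, c):
    """Compute pilot metrics and check them against the agreed criteria."""
    n = len(runs)
    metrics = {
        "median_seconds": median(r.seconds for r in runs),
        "first_pass_yield": sum(r.passed_first_try for r in runs) / n,
        "rework_rate": sum(r.reworked for r in runs) / n,
        "safety_events": sum(r.safety_events for r in runs),
    }
    passed = (
        metrics["median_seconds"] <= c.max_median_seconds
        and metrics["first_pass_yield"] >= c.min_first_pass_yield
        and metrics["rework_rate"] <= c.max_rework_rate
        and metrics["safety_events"] <= c.max_safety_events
    )
    return metrics, passed


# Usage: four pilot runs against a zero-safety-event bar.
runs = [Run(40, True, False, 0), Run(55, False, True, 0),
        Run(48, True, False, 0), Run(50, True, False, 1)]
criteria = Criteria(max_median_seconds=60, min_first_pass_yield=0.7,
                    max_rework_rate=0.3, max_safety_events=0)
metrics, passed = evaluate(runs, criteria)
print(metrics, passed)
```

In this example the pilot misses its bar on a single safety event even though speed and yield look fine, which is the kind of trade‑off you want surfaced before scaling.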

AI Agents vs Humans: Fast Hands, Fuzzy Judgment

Early tests suggest AI agents can finish knowledge‑work tasks up to 88% faster than humans, but they still lag on quality when context is nuanced or requirements shift mid‑task. That mirrors patterns we've seen across customer support, research synthesis, and report generation.

Why speed outruns quality:

- Agents optimize for completion, not comprehension; instructions that are implicit to humans can be invisible to models.

- They struggle with evolving goals, cross‑system dependencies, and tacit knowledge held by teams.

- Quality assurance is often bolted on after the fact instead of designed into the workflow.

How to deploy agents without eroding quality:

- Start with "precision‑tolerant" tasks, where small errors are cheap to catch and fix: routing, summarizing, data normalization, and draft generation.

- Codify acceptance criteria: define what "good" looks like and embed checks before outputs hit production.

- Keep a human‑in‑the‑loop for exception handling and final QA on high‑stakes tasks.

- Instrument everything: track time saved, rework rate, escalation frequency, customer satisfaction, and error cost.

- Iterate prompts and policies weekly; small tweaks compound into big quality gains.
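Here is a minimal sketch of a staged QA gate for agent output: every draft runs through explicit acceptance checks, failures or high‑stakes items escalate to a human, and the escalation rate becomes one of your tracked metrics. The checks (`non_empty`, `under_length`, `has_ticket_id`) and the 800‑character cap are hypothetical examples for a "draft customer reply" task; swap in the criteria your team defines.

```python
from typing import Callable

Check = Callable[[str], bool]

# Hypothetical acceptance checks for a "draft customer reply" task.
CHECKS: dict[str, Check] = {
    "non_empty": lambda out: bool(out.strip()),
    "under_length": lambda out: len(out) <= 800,
    "has_ticket_id": lambda out: "TICKET-" in out,
}


def qa_gate(output: str, high_stakes: bool) -> tuple[str, list[str]]:
    """Run every check; escalate to a human on any failure or on high-stakes items."""
    failed = [name for name, check in CHECKS.items() if not check(output)]
    if failed or high_stakes:
        return "escalate", failed
    return "ship", []


def escalation_rate(decisions: list[str]) -> float:
    """Share of outputs routed to a human; track this alongside rework rate."""
    return sum(d == "escalate" for d in decisions) / len(decisions)


# Usage: (draft text, is_high_stakes) pairs.
drafts = [("Re TICKET-42: refund issued.", False), ("", False),
          ("TICKET-7: account closed.", True)]
decisions = [qa_gate(text, stakes)[0] for text, stakes in drafts]
print(decisions, escalation_rate(decisions))
```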

Speed is the headline—quality is the moat. Treat agent workflows as living systems with governance, not gadgets.

Health AI Assistants: Promise, Pitfalls, and a Pragmatic Path

A new wave of health AI assistants is emerging to help with intake, triage, education, and care navigation. The upside is real: shorter wait times, clearer instructions, and better follow‑through between visits. But the stakes are high, and safety must be designed in from day one.

High‑value use cases:

- Intake and triage: capture symptoms, history, and risk flags before the clinician visit.

- Navigation: guide patients through benefits, referrals, and follow‑up logistics.

- Education: personalize post‑visit instructions and medication reminders.

Risk and compliance guardrails:

- Treat patient data as PHI: adopt minimum‑necessary data flows, access controls, and audit trails.

- Demand evidence: require evaluation on representative cases, not cherry‑picked demos.

- Human oversight: ensure clinicians review high‑risk recommendations and that the system clearly signals uncertainty.

- Bias checks: regularly test for disparities across demographics and conditions.
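The minimum‑necessary and uncertainty‑signaling guardrails can be sketched as two small functions. Everything here is an assumption for illustration: the field allowlist, the red‑flag phrases, the 0.8 threshold, and the premise that the assistant emits a calibrated confidence score. Real triage rules belong to your clinical team.

```python
# Hypothetical allowlist: only the fields this intake step actually needs.
INTAKE_FIELDS = {"age", "symptoms", "symptom_days", "allergies"}

# Illustrative red-flag phrases that always route to a clinician (not clinical guidance).
RED_FLAGS = ("chest pain", "shortness of breath", "suicidal")


def minimum_necessary(record: dict) -> dict:
    """Drop every field not on the allowlist before it reaches the assistant."""
    return {k: v for k, v in record.items() if k in INTAKE_FIELDS}


def route(suggestion: str, confidence: float, symptoms: list[str],
          threshold: float = 0.8) -> str:
    """Send red flags and low-confidence output to a clinician; otherwise show
    the suggestion with its confidence so uncertainty stays visible."""
    text = " ".join(symptoms).lower()
    if any(flag in text for flag in RED_FLAGS):
        return "clinician_review: red flag"
    if confidence < threshold:
        return f"clinician_review: low confidence ({confidence:.2f})"
    return f"show: {suggestion} (confidence {confidence:.2f})"


# Usage
record = {"name": "Jane Doe", "insurance_id": "X123", "age": 54, "symptoms": ["dry cough"]}
intake = minimum_necessary(record)
print(intake)
print(route("Schedule a routine visit", 0.91, intake["symptoms"]))
```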

Procurement checklist:

- What's the validated accuracy across your top 20 visit reasons?

- How are hallucinations detected, blocked, and escalated?

- Can you trace every suggestion back to sources or clinical rationales?

- What's the total cost of ownership including integration, risk, and monitoring?

Culture Check: Tools Don't Replace Mastery

Cultural moments—like public figures leaning on tools such as ChatGPT to shortcut study—are reminders that AI accelerates learning only when fundamentals are solid. For teams, that means:

- Use AI to practice, not to skip practice. Drafts, drills, and feedback loops beat copy‑paste.

- Make expertise visible. Document playbooks, edge cases, and decision criteria so agents and robots learn the right habits.

- Reward quality, not just throughput. Align incentives with outcomes that matter to customers.

Your 90‑Day Playbook to Capture the Upside

Week 1–2: Prioritize

- Pick two repeatable tasks for agents and one physical task for teleoperation‑led robotics.

- Define KPIs: time saved, first‑pass yield, escalation rate, error cost, safety events.

Week 3–6: Pilot

- Stand up agent workflows with staged QA gates.

- Run teleoperation sessions to collect 100–300 demos of your target task.

- Create a data governance plan and a rollback/safety protocol.

Week 7–10: Evaluate and Tune

- Compare agents vs. humans on speed, quality, and customer impact.

- Train initial policies from demonstrations and measure generalization across environments.

- Tighten prompts, acceptance criteria, and safety constraints.

Week 11–13: Scale

- Automate low‑risk sub‑tasks; keep human oversight on high‑stakes steps.

- Expand the demonstration corpus to new objects, layouts, and edge cases.

- Publish an internal scorecard monthly; socialize wins and lessons.

What This Means for 2026 Roadmaps

- Convergence is here: humanoid robots trained via teleoperation plus software agents will co‑pilot operations in kitchens, warehouses, clinics, and front offices.

- Per‑task costs tend to fall as your dataset grows. Data is a compounding asset, so treat it like one.

- The winners pair speed with trust: measurable quality, transparent governance, and human oversight where it counts.

Conclusion: From Demos to Durable Advantage

Robot training data is the lever that turns clever demos into dependable autonomy. Teleoperation accelerates data collection while preserving safety, and disciplined agent workflows turn "88% faster" into better customer outcomes, not just more output.

If you're building next year's plan, start with one physical task and one agent workflow. Set clear KPIs, invest in data governance, and iterate weekly. Want a shortcut? Request a strategy session with our team and get a tailored roadmap for your use case.

The question now isn't whether robots and agents can help—it's how quickly you can turn their speed into quality your customers can feel.