LLMs boost productivity—but at a high energy and water cost. Learn how to design AI workflows and infrastructure that stay powerful while remaining sustainable.

AI's Hidden Cost: Making LLMs More Sustainable

As AI tools and large language models (LLMs) become part of everyday work, a quieter story is unfolding behind the scenes: training and running these models consumes enormous amounts of energy and water.

For entrepreneurs, creators, and professionals who rely on AI to boost productivity, this raises an uncomfortable question: can we work smarter with AI without putting unsustainable pressure on the planet?

In this post, we'll unpack the environmental footprint of LLMs in plain language, explain why energy and water usage are rising so quickly, and—most importantly—outline practical steps you and your organization can take to use AI more responsibly. This isn't about rejecting AI; it's about designing AI-powered productivity with sustainability in mind.

The Dark Side of LLMs: Why Energy and Water Matter

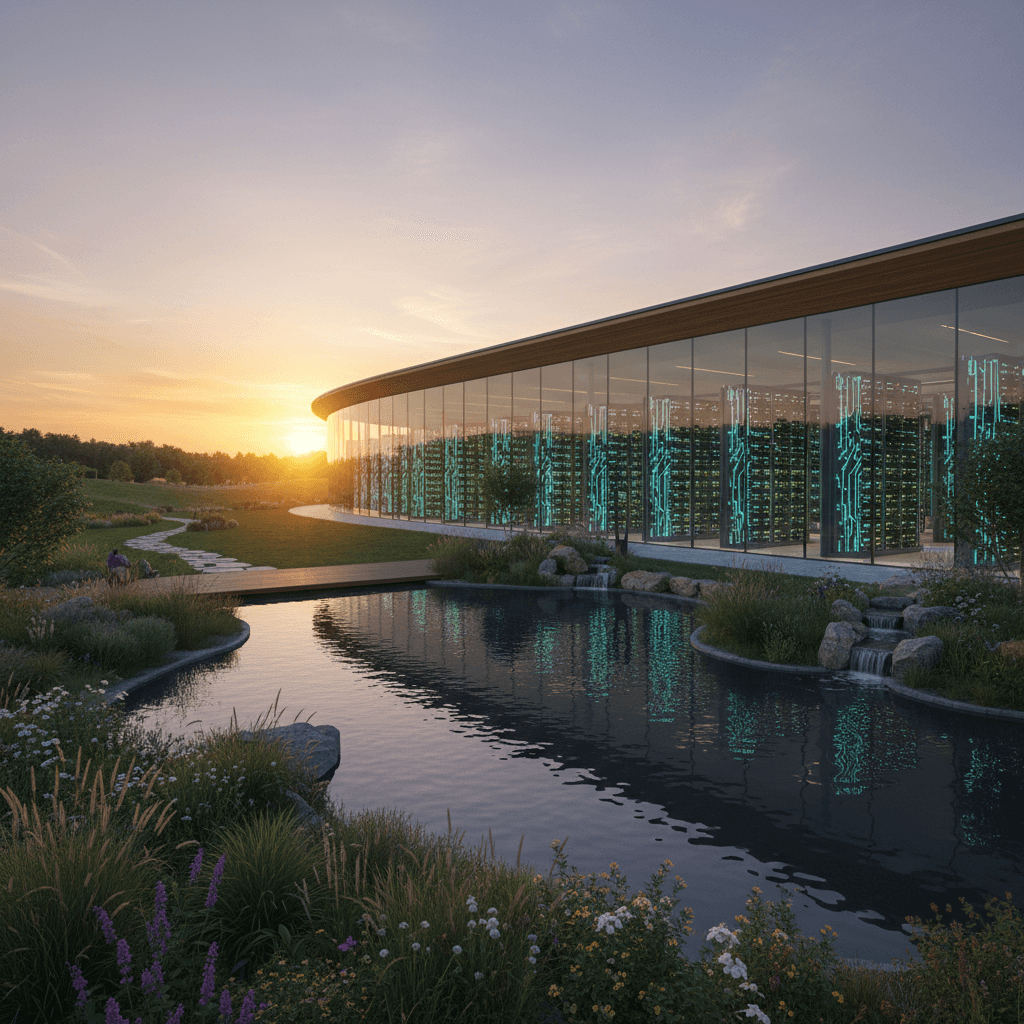

LLMs feel weightless: you type a prompt, and an answer appears in seconds. But under the surface, every query runs on vast data center infrastructure—servers, storage, networking, cooling systems, and power delivery.

Two resources are at the center of the debate:

- Energy – to power the GPUs/TPUs that train and run AI models

- Water – to cool servers and manage heat in data centers

How AI Training and Inference Use Resources

There are two major phases in the life of an AI model:

- Training

  - Involves feeding massive datasets into GPU clusters.

  - Can run for weeks or months, consuming huge amounts of electricity.

  - Generates substantial heat, requiring intensive cooling, often via water-based systems.

- Inference (day-to-day use)

  - Every time you generate a response, summarize a document, or analyze data with an LLM, you're triggering inference.

  - Individually, each query seems small, but at global scale, millions to billions of daily requests create a continuous energy and cooling load.

For knowledge workers, this means that your new AI-powered productivity stack—chatbots, summarizers, copilots, and automations—has an invisible "resource meter" constantly running in the background.

Productivity gains from AI are real, but so is the environmental bill they generate.

Why Resource Use is Climbing So Fast

AI adoption has grown faster than that of almost any previous enterprise technology. Several trends are driving the steep rise in energy and water demands.

Bigger Models, Bigger Footprints

- LLMs have rapidly increased in parameter count (the internal weights that make them powerful).

- Larger models typically require:

  - More computation to train

  - More memory and compute per query during inference

- As organizations chase higher accuracy and "smarter" behavior, model size often grows faster than efficiency improvements.

Always-On AI in the Workplace

In 2025, AI is no longer a side experiment. It's embedded into:

- Email drafting and scheduling

- Sales outreach and customer support

- Code generation and QA

- Document summarization and knowledge search

These AI assistants run constantly. Even "small" tasks—rewriting an email, polishing a proposal—add up when thousands of employees do them daily.

Data Center Cooling and Water Usage

To keep servers from overheating, data centers rely on cooling systems, which often use large volumes of water. In many facilities:

- Water is used in evaporative cooling towers.

- Hot air is passed through systems where water absorbs the heat and evaporates.

When AI loads spike, more servers run at higher utilization, and cooling systems must work harder, increasing water consumption.

This is why local communities and regulators are starting to question massive new AI data centers, especially in regions already facing water stress or fragile power grids.

Inside the AI Stack: Where You Can Reduce Impact

The good news: you don't need to be a data center operator to influence AI sustainability. From IT strategy to day-to-day workflow choices, there are levers at every layer of the stack.

1. Infrastructure and Energy Strategy

If you have influence over IT, cloud, or data strategy, think in terms of where and how your AI workloads run.

Key approaches:

- Choose greener regions and providers

  - Run AI workloads in regions with higher shares of renewable energy.

  - Prioritize vendors that publish their energy and water usage and show progress toward sustainability targets.

- Shift non-urgent workloads in time

  - Schedule model training, re-training, or heavy analytics during periods of lower grid demand or higher renewable availability.

  - This is particularly effective for batch training or large back-office AI jobs.

- Embrace hardware efficiency

  - Modern GPUs, custom AI accelerators, and efficient CPUs can perform more work per watt.

  - When negotiating or selecting vendors, consider not just price and speed, but energy efficiency per unit of compute.
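
If you can get an hourly carbon-intensity forecast for your grid or cloud region, shifting a batch job in time can be as simple as picking the cleanest window. Here is a minimal Python sketch of that idea. The function name `pick_greenest_window` and the forecast numbers are made up for illustration; a real forecast would come from your grid operator or cloud provider.

```python
from datetime import datetime, timedelta


def pick_greenest_window(forecast, job_hours):
    """Return (start_time, average_intensity) for the contiguous window
    of `job_hours` hours with the lowest average carbon intensity.

    forecast: list of (start_time, gCO2_per_kWh) tuples, one per hour, in order.
    """
    if job_hours < 1 or job_hours > len(forecast):
        raise ValueError("job_hours must fit inside the forecast")

    best_start, best_avg = None, float("inf")
    for i in range(len(forecast) - job_hours + 1):
        window = forecast[i:i + job_hours]
        avg = sum(intensity for _, intensity in window) / job_hours
        if avg < best_avg:
            best_start, best_avg = window[0][0], avg
    return best_start, best_avg


# Illustrative hourly forecast (invented values, gCO2 per kWh).
base = datetime(2025, 1, 1, 0, 0)
intensities = [420, 410, 380, 300, 220, 180, 190, 260, 350, 430, 450, 440]
forecast = [(base + timedelta(hours=h), v) for h, v in enumerate(intensities)]

start, avg = pick_greenest_window(forecast, job_hours=3)
print(f"Start the 3-hour job at {start:%H:%M} (avg {avg:.0f} gCO2/kWh)")
```

A job scheduler could call something like this once a day and queue non-urgent training or analytics runs for the returned start time.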

2. Smarter Storage and Data Management

Large, always-hot data lakes dramatically increase the cost of AI.

Here's where concepts like active archiving, data tiering, and storage optimization come in:

- Active archiving

  - Move rarely accessed data to lower-cost, lower-energy storage tiers.

  - Keep only frequently used data on high-performance disks.

- Data tiering

  - Automatically place data on the most appropriate storage layer (fast/expensive vs. slow/efficient) based on access patterns.

  - Reduces power draw from keeping massive volumes of cold data on hot, spinning disks.

- Data minimization for AI training

  - Curate your training sets: more data is not always better.

  - High-quality, well-labeled, deduplicated data improves model performance and reduces wasteful compute.
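
To make tiering concrete, here is a small Python sketch that assigns each dataset to a storage tier based on how recently it was accessed. The tier names and the 30-day and 180-day thresholds are assumptions for illustration, not recommendations; real storage platforms usually offer their own lifecycle rules.

```python
from datetime import date

# Hypothetical thresholds; tune them to your own access patterns.
HOT_MAX_AGE_DAYS = 30
WARM_MAX_AGE_DAYS = 180


def choose_tier(last_accessed, today):
    """Map days since last access to a storage tier name."""
    age_days = (today - last_accessed).days
    if age_days <= HOT_MAX_AGE_DAYS:
        return "hot"
    if age_days <= WARM_MAX_AGE_DAYS:
        return "warm"
    return "archive"


def plan_moves(catalog, today):
    """Return (name, current_tier, target_tier) for every dataset whose
    tier should change. catalog maps name -> (current_tier, last_accessed)."""
    moves = []
    for name, (current_tier, last_accessed) in catalog.items():
        target = choose_tier(last_accessed, today)
        if target != current_tier:
            moves.append((name, current_tier, target))
    return moves


# Invented example catalog: everything currently sits on hot storage.
catalog = {
    "q2_sales.parquet": ("hot", date(2025, 6, 20)),
    "q1_report.pdf": ("hot", date(2025, 3, 1)),
    "2023_logs.tar": ("hot", date(2024, 1, 15)),
}

for name, src, dst in plan_moves(catalog, today=date(2025, 6, 30)):
    print(f"move {name}: {src} -> {dst}")
```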

Optimizing data isn't just an infrastructure story—it's a productivity story. Cleaner, better-organized data makes AI tools more accurate, which saves time and reduces unnecessary re-runs and retries.

Designing Sustainable AI Into Your Daily Workflows

Even if you don't own servers or make cloud decisions, the way you use AI at work affects its footprint. Scaling responsible habits across a team or company can be surprisingly powerful.

1. Right-Size the Model for the Task

Not every task needs a massive, general-purpose LLM.

Whenever possible:

- Use smaller, specialized models for routine tasks (classification, keyword extraction, routing, sentiment analysis).

- Reserve large frontier models for complex reasoning, creativity, or high-stakes decisions.

From a productivity standpoint, this also means:

- Faster responses

- Lower cost per query

- Less congestion on shared AI resources
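
In an application, right-sizing can start as a simple routing rule. The sketch below is one way to express it in Python. The model names are placeholders rather than real products, and the task list simply mirrors the routine examples above.

```python
# Task types treated as routine, taken from the examples in this section.
ROUTINE_TASKS = {
    "classification",
    "keyword_extraction",
    "routing",
    "sentiment_analysis",
}

# Placeholder model identifiers (hypothetical).
SMALL_MODEL = "small-specialized-model"
LARGE_MODEL = "large-general-model"


def pick_model(task_type, high_stakes=False):
    """Send routine, low-stakes tasks to the small model; everything else
    (complex reasoning, creative work, high-stakes decisions) to the large one."""
    if high_stakes or task_type not in ROUTINE_TASKS:
        return LARGE_MODEL
    return SMALL_MODEL


print(pick_model("sentiment_analysis"))
print(pick_model("contract_review", high_stakes=True))
```

Defaulting unknown task types to the large model is a deliberately cautious choice here; a team comfortable with more risk could flip that default.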

2. Write Better Prompts, Fewer Calls

Inefficient prompting leads to more tokens, more retries, and more wasted compute. Good prompt engineering is not just about quality—it's also about resource efficiency.

Practical habits:

- Be specific: specify format, tone, and constraints in one prompt instead of multiple back-and-forths.

- Reuse prompt templates for recurring tasks (weekly reports, meeting summaries, outreach sequences).

- Limit unnecessary generations: avoid asking for ten variations when you only need one or two.

This aligns perfectly with the series theme: AI should make work more productive with fewer steps, not more.
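
A reusable template is easy to set up with Python's standard library. The sketch below puts format, tone, and length into a single prompt for a recurring weekly report. The template wording, the `build_prompt` helper, and its default values are illustrative assumptions.

```python
from string import Template

# One template that states format, tone, and constraints up front,
# so the first response is more likely to be usable without retries.
WEEKLY_REPORT = Template(
    "Write a weekly status report for $team.\n"
    "Format: $output_format. Tone: $tone. Maximum length: $max_words words.\n"
    "Source notes:\n$notes"
)


def build_prompt(team, notes, output_format="three bullet sections",
                 tone="neutral", max_words=200):
    """Fill the shared template; substitute() raises KeyError if a field is missing."""
    return WEEKLY_REPORT.substitute(
        team=team,
        notes=notes,
        output_format=output_format,
        tone=tone,
        max_words=max_words,
    )


prompt = build_prompt("Platform team", "- shipped v2\n- fixed login bug")
print(prompt)
```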

3. Consolidate Workflows

Instead of:

- Generating an outline

- Then asking for a draft

- Then asking for a summary

- Then asking for a shorter social caption

You can often:

- Request a structured output in one pass:

  - "Create: (1) outline, (2) 800-word draft, (3) 150-word summary, (4) 3 social captions."

This reduces the number of AI calls, saving time, cost, and compute while keeping your workflow tight and organized.
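
If you build prompts in code, the same consolidation can be automated. This short Python sketch turns a list of deliverables into one numbered request; the helper name `consolidated_prompt` and the extra labeling instruction are assumptions for illustration.

```python
def consolidated_prompt(topic, deliverables):
    """Build a single request that asks for every deliverable in one pass."""
    numbered = ", ".join(
        f"({i}) {item}" for i, item in enumerate(deliverables, start=1)
    )
    return (
        f"Topic: {topic}\n"
        f"Create: {numbered}.\n"
        "Label each part with its number."
    )


deliverables = ["outline", "800-word draft", "150-word summary", "3 social captions"]
prompt = consolidated_prompt("sustainable AI at work", deliverables)
calls_saved = len(deliverables) - 1  # one call instead of four

print(prompt)
print(f"Calls saved: {calls_saved}")
```

For very long outputs a single pass can hurt quality, so treat this as a default to try first rather than a rule.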

4. Set Team Norms Around AI Usage

For teams and organizations, consider a lightweight AI usage policy that balances productivity and sustainability:

- Define which tools to use for which tasks (and which to avoid).

- Encourage employees to:

  - Use AI for complex, high-value work first.

  - Avoid trivial or novelty prompts on production systems.

  - Batch similar requests where possible.

These small cultural shifts can significantly reduce total AI load without sacrificing the benefits of technology in your daily work.

Building a Sustainable AI Strategy for Your Organization

If you're in a leadership, IT, or operations role, you can shape a sustainable AI roadmap that aligns productivity, cost, and environmental responsibility.

Step 1: Audit Your AI Footprint

Start by mapping:

- Which AI tools and platforms your teams are using

- The approximate volume of daily or monthly queries

- Where your models run (regions, providers, on-prem vs. cloud)

- Any available metrics on energy usage, water usage, or carbon intensity from your vendors

You don't need perfect precision. Even rough estimates help identify the biggest levers for change.
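
A rough estimate can be a few lines of arithmetic. The Python sketch below multiplies query volume by per-query energy, then derives water and carbon figures from that. Every input value shown is a placeholder, not a measurement: replace them with numbers from your vendors or your own metering before drawing any conclusions.

```python
def estimate_monthly_footprint(queries_per_month, wh_per_query,
                               liters_per_kwh, grid_gco2_per_kwh):
    """Back-of-envelope footprint from four inputs you supply yourself."""
    energy_kwh = queries_per_month * wh_per_query / 1000
    return {
        "energy_kwh": energy_kwh,
        "water_liters": energy_kwh * liters_per_kwh,
        "co2_kg": energy_kwh * grid_gco2_per_kwh / 1000,
    }


# PLACEHOLDER inputs for illustration only; they are not real measurements.
estimate = estimate_monthly_footprint(
    queries_per_month=100_000,
    wh_per_query=1.0,        # assumed energy per query, in watt-hours
    liters_per_kwh=2.0,      # assumed cooling water per kWh
    grid_gco2_per_kwh=400,   # assumed grid carbon intensity
)

for metric, value in estimate.items():
    print(f"{metric}: {value:.1f}")
```

Running the same function per tool or per team makes it easier to see which usage dominates the total.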

Step 2: Align AI Projects with Business Value

Not every AI experiment is worth the resource cost.

Ask of each initiative:

- Does this significantly improve productivity, revenue, or quality?

- Can we right-size the model or approach to reduce resource use?

- Could a simpler form of automation or analytics achieve the same outcome?

Prioritize projects with clear value and efficient architectures.

Step 3: Bake Sustainability into Procurement and Architecture

When selecting AI vendors, infrastructure, or platforms:

- Include sustainability requirements (energy mix, cooling methods, reporting transparency) in RFPs and contracts.

- Favor architectures that:

  - Cache common results

  - Reuse embeddings and vector indexes

  - Use retrieval-augmented generation (RAG) to reduce the need for massive, generic models to "know everything."
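
Caching common results is the easiest of these to prototype. Here is a minimal in-memory sketch in Python that skips the model call when the same prompt has already been answered. The `CachedModel` class is hypothetical, the `fake_model` function stands in for a real API call, and the normalization (lowercasing and collapsing whitespace) is an assumption that will not suit case-sensitive prompts.

```python
import hashlib


class CachedModel:
    """Wrap any prompt -> answer function with a simple in-memory cache."""

    def __init__(self, call_model):
        self._call_model = call_model
        self._cache = {}
        self.model_calls = 0  # how many times the real model was invoked

    @staticmethod
    def _key(prompt):
        # Normalize case and whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def ask(self, prompt):
        key = self._key(prompt)
        if key not in self._cache:
            self.model_calls += 1
            self._cache[key] = self._call_model(prompt)
        return self._cache[key]


def fake_model(prompt):
    """Stand-in for a real model call (hypothetical)."""
    return f"answer to: {prompt}"


model = CachedModel(fake_model)
first = model.ask("What is our refund policy?")
second = model.ask("what is our   refund policy?")
print(model.model_calls)
```

A production setup would also need expiry and size limits so that stale answers do not live forever.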

Step 4: Educate Your Organization

People are more likely to adopt sustainable behavior when they understand why it matters.

- Share concise explainers on how AI consumes energy and water.

- Offer short internal trainings on efficient prompting and smart AI usage.

- Celebrate teams that ship solutions that are both high-impact and resource-conscious.

This turns sustainability from a constraint into a design principle that drives better, leaner solutions.

Work Smarter With AI—Without Ignoring the Planet

AI is transforming how we work, create, and collaborate. From drafting proposals to automating reports, it's now central to everyday productivity. But the rise of LLMs also brings a responsibility: the smarter our tools become, the smarter we must be about how we use them.

The key takeaways:

- Training and running LLMs consume significant energy and water, especially in data centers.

- Rapid growth in AI usage at work amplifies this impact.

- We can reduce the footprint through infrastructure choices, data management, model selection, efficient prompts, and better workflows.

In the spirit of this AI & Technology series, the goal isn't to step away from AI—it's to align productivity with sustainability. As you design your next AI-powered workflow or rollout, ask:

How can we get the same or better results with fewer resources, fewer calls, and smarter design?

If you start treating sustainability as a core requirement—right alongside speed, cost, and accuracy—you'll not only protect the planet, you'll often discover leaner, more elegant AI solutions that make your work genuinely smarter, not just louder.