OpenAI's move toward AWS and Google's space datacenter concept are reshaping AI infrastructure. Learn what's real, what's hype, and how to build a 90‑day plan that cuts cost and boosts speed.

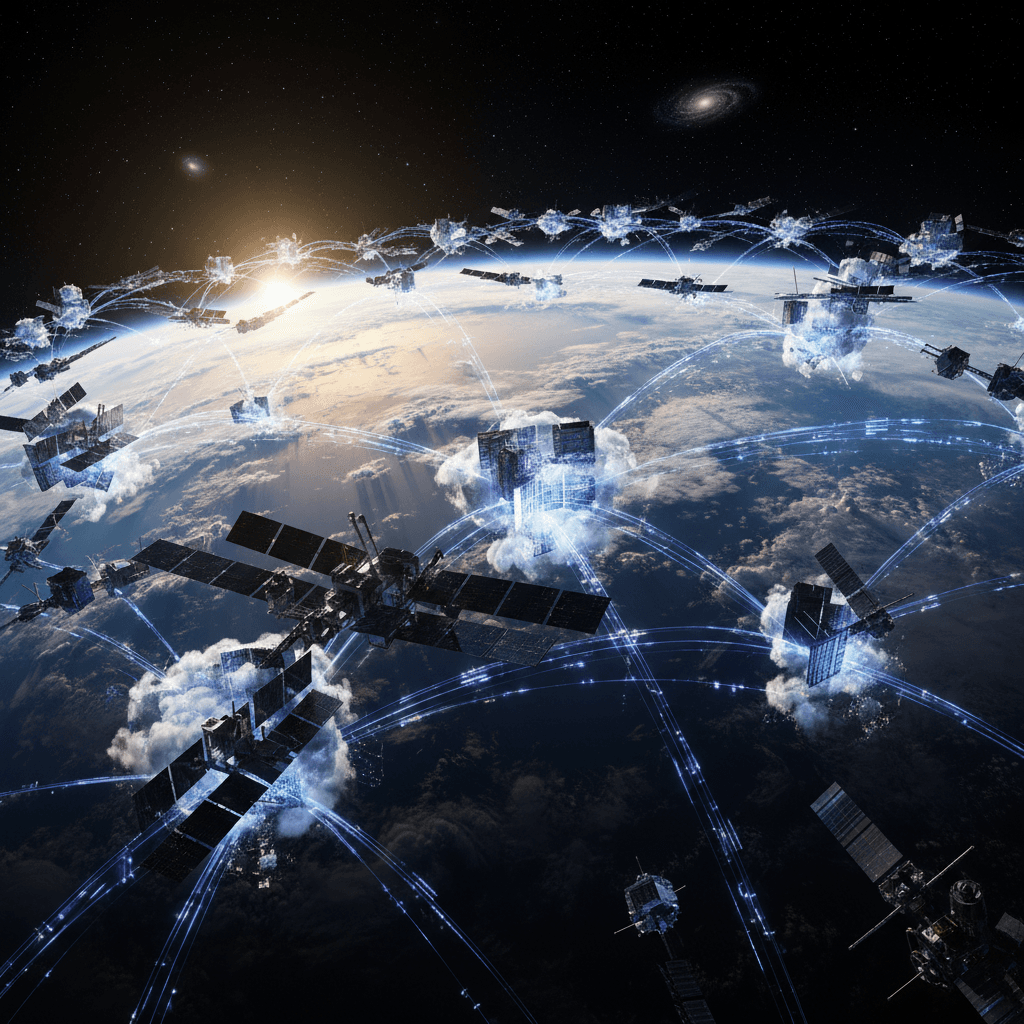

As 2025 winds down, the AI infrastructure race is rewriting the rules of cloud strategy. Reports and industry chatter suggest OpenAI is deepening ties with AWS while maintaining its Microsoft relationship, and Google is exploring orbital computing through its Project Suncatcher research effort. If space datacenters sound sci‑fi, remember: the cost of launch is falling, power demand is soaring, and AI training is hungry enough to push compute literally off‑planet.

For leaders finalizing 2026 plans, this matters now. Vendor lock‑in, GPU scarcity, regulatory pressure, and power constraints are converging. In this post, we unpack what OpenAI's multi‑cloud moves signal, how space datacenters might actually work, what "AI layoffs" really mean, and how to translate all of it into a pragmatic playbook for the next 90 days.

Why OpenAI Is Courting AWS While Staying with Microsoft

The headline isn't "breaking free"—it's diversification. OpenAI's dependence on Azure was a fast lane to scale, but 2025's reality favors a portfolio approach. AWS brings a different stack, different incentives, and a hedge against single‑vendor risk. Meanwhile, Microsoft still offers deeply integrated supercomputing and a robust enterprise footprint.

The multi‑cloud calculus

- Cost levers: Training economics depend on GPU/accelerator pricing, queue times, and reserved capacity. AWS's Trainium (training) and Inferentia (inference) chips can lower costs for certain architectures, while Azure's premium GPU fleet excels at frontier model training.

- Availability: Access to Nvidia GPUs remains the gating factor for many. Spreading demand across clouds can reduce wait times and adds negotiating leverage.

- Data gravity and egress: Housing data, labeling pipelines, and feature stores near your model training saves money and cuts latency. Multi‑cloud lets you position workloads where they run best, not where you started, provided egress fees do not erase the gain.
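
To make the data‑gravity trade‑off concrete, here is a rough sketch that weighs a one‑time egress bill against the compute savings from moving a training job. Every price, dataset size, and function name below is a hypothetical placeholder, not a quote from any provider.

```python
def egress_cost_usd(dataset_tb: float, egress_usd_per_gb: float) -> float:
    """One-time cost to copy a dataset out of its current cloud."""
    return dataset_tb * 1000 * egress_usd_per_gb  # 1 TB = 1000 GB (decimal)


def net_savings_usd(
    dataset_tb: float,
    egress_usd_per_gb: float,
    gpu_hours: float,
    home_usd_per_gpu_hour: float,
    away_usd_per_gpu_hour: float,
) -> float:
    """Compute savings from moving the job, minus the egress bill.

    Positive means the move pays for itself; negative means stay put.
    """
    compute_savings = gpu_hours * (home_usd_per_gpu_hour - away_usd_per_gpu_hour)
    return compute_savings - egress_cost_usd(dataset_tb, egress_usd_per_gb)


# Hypothetical inputs: 200 TB dataset, $0.05/GB egress, 20,000 GPU-hours.
result = net_savings_usd(
    dataset_tb=200,
    egress_usd_per_gb=0.05,
    gpu_hours=20_000,
    home_usd_per_gpu_hour=4.00,
    away_usd_per_gpu_hour=3.25,
)
print(f"Net savings from moving the job: ${result:,.0f}")
```

With these made‑up numbers the move clears its egress cost, but a larger dataset or a smaller price gap flips the answer, which is why the calculation is worth rerunning per workload.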

What this signals for buyers

- Expect aggressive credits and private pricing from both AWS and Microsoft as they compete for AI workloads.

- Architecture should be "interoperability-first": containerized training jobs, model registries that sync across clouds, and standardized MLOps.

- Negotiate for guaranteed accelerator capacity windows during key training cycles to avoid bottlenecks.

Action step: Run a 2-week bake‑off. Train the same medium‑sized model on two providers with identical hyperparameters and compare throughput, queue time, cost per trained token, and reliability. Use results to anchor 2026 contract terms.
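
One way to keep the bake‑off honest is to record each run in the same structure and derive the comparison metrics from it. The sketch below assumes you can pull token counts, hours, and invoice totals from your own logs; the `BakeoffRun` class, provider names, and all figures are illustrative, not measurements.

```python
from dataclasses import dataclass


@dataclass
class BakeoffRun:
    provider: str
    tokens_trained: int       # total tokens processed during the run
    wall_clock_hours: float   # time spent actually training
    queue_hours: float        # time spent waiting for capacity
    total_cost_usd: float     # invoice total for the run
    failed_restarts: int      # job restarts caused by failures

    def cost_per_million_tokens(self) -> float:
        return self.total_cost_usd / (self.tokens_trained / 1_000_000)

    def tokens_per_second(self) -> float:
        return self.tokens_trained / (self.wall_clock_hours * 3600)

    def time_to_result_hours(self) -> float:
        # Queue time counts: it delays the result just as slow training does.
        return self.queue_hours + self.wall_clock_hours


# Hypothetical results for the same model and hyperparameters on two clouds.
runs = [
    BakeoffRun("provider_a", 50_000_000_000, 120.0, 6.0, 42_000.0, 1),
    BakeoffRun("provider_b", 50_000_000_000, 135.0, 1.5, 39_500.0, 3),
]

for run in runs:
    print(
        f"{run.provider}: "
        f"${run.cost_per_million_tokens():.2f} per 1M tokens, "
        f"{run.tokens_per_second():,.0f} tokens/s, "
        f"{run.time_to_result_hours():.1f} h to result, "
        f"{run.failed_restarts} restarts"
    )
```

Keeping queue time and restarts next to cost makes it harder for a cheap but unreliable provider to win on price alone.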

Inside Google's Project Suncatcher: Space Datacenters

Space datacenters sound like marketing, but the physics pencil out in some scenarios. Abundant solar power off‑planet is appealing; cooling is harder but doable with radiators. The real question is not "can we?" but "for which workloads does it win?"

How orbital compute could work

- Power: Depending on the orbit, solar arrays can avoid most or all night cycles (a dawn‑dusk sun‑synchronous orbit, for example, stays in near‑continuous sunlight), enabling high duty cycles for energy-intensive tasks.

- Thermal management: In vacuum, heat is shed via radiation, not convection. That demands large radiator surfaces but removes the need for water cooling plants.

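- Latency: LEO links can add tens of milliseconds round trip once routing and ground hops are included; GEO adds roughly 240 ms or more from signal propagation alone. That favors batch training, rendering, or disaster recovery over interactive inference.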

- Backhaul: Laser inter-satellite links and ground stations handle data transfer. Bandwidth and availability will dictate viable use cases.
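
The latency and thermal points above can be sanity‑checked with two textbook formulas: propagation delay at the speed of light, and the Stefan‑Boltzmann law for radiated heat. This is a back‑of‑envelope sketch only. The altitudes, the 1 MW heat load, the 300 K radiator temperature, and the 0.9 emissivity are assumptions picked for illustration, and the radiator estimate ignores absorbed sunlight and Earthshine.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4)


def min_round_trip_ms(altitude_km: float) -> float:
    """Lower bound on ground -> satellite -> ground delay.

    Assumes the satellite is directly overhead and ignores routing,
    queuing, and processing, so real latency is higher.
    """
    return 2 * altitude_km / SPEED_OF_LIGHT_KM_S * 1000


def radiator_area_m2(
    heat_watts: float, radiator_temp_k: float, emissivity: float = 0.9
) -> float:
    """Ideal one-sided radiator area needed to reject heat_watts to deep space."""
    flux_w_per_m2 = emissivity * STEFAN_BOLTZMANN * radiator_temp_k**4
    return heat_watts / flux_w_per_m2


leo_ms = min_round_trip_ms(550)      # assumed LEO altitude
geo_ms = min_round_trip_ms(35_786)   # geostationary altitude
area = radiator_area_m2(heat_watts=1_000_000, radiator_temp_k=300)

print(f"LEO minimum round trip: {leo_ms:.1f} ms")
print(f"GEO minimum round trip: {geo_ms:.1f} ms")
print(f"Radiator area for 1 MW at 300 K: {area:,.0f} m^2")
```

The pure propagation floor for LEO is only a few milliseconds; the tens of milliseconds seen in practice come from slant paths, ground stations, and network routing. The radiator figure shows why thermal design, not power, tends to dominate the size of an orbital compute platform.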

What could run in space—and what should not

- Good fits: Long‑running training jobs, video rendering, genomics pipelines, and cold storage pre‑processing where time sensitivity is low.

- Poor fits: Real‑time inference for chat, search, or trading, where every millisecond counts and data sovereignty is strict.

Risks and constraints

- Regulatory: Space assets intersect with spectrum, export controls, and cross‑border data rules. Compliance will be complex.

- Reliability: Launch failures, debris, and maintenance are non‑trivial. Redundancy and graceful degradation are essential.

- Cost curve: Launch is cheaper than ever, but still not cheap. The business case is sensitive to utilization and energy prices on Earth.

Action step: Add "orbital compute" to your horizon scanning. It's not a 2025 procurement item for most, but it may influence multi‑year capacity planning and energy strategy, especially for GPU‑intensive R&D.

What "AI Layoffs" Really Mean for 2026 Planning

Layoffs framed as "because AI" often mask a bundle of factors: post‑pandemic over‑hiring, higher interest rates, product pivots, and automation reshaping roles. AI is real, but the narrative is oversimplified. The leaders winning right now are redesigning work, not just cutting headcount.

The operating model shift

- From tasks to workflows: Copilots and agents remove steps but introduce oversight and evaluation. Roles shift toward orchestration.

- Fewer handoffs, more ownership: Teams that embed AI into the flow of work compress cycles and reduce coordination costs.

- Measure the right outcomes: Track cycle time, defect rate, and value delivered per headcount—not just "tickets closed."

A humane and effective talent plan

- Reskill at the edge: Upskill power users in each function as "automation champions." Empower them with lightweight guardrails.

- Redesign roles: Where AI halves a task, don't idle the remaining time—reinvest in higher‑value work like experimentation and customer research.

- Govern lightly: Provide safe data access, prompt standards, and review policies. Overly restrictive controls slow adoption and erode ROI.

Action step: Pilot "AI sprint weeks" in two teams. Set a baseline, embed an assistant (e.g., ChatGPT Go or a similar low‑cost tier), document three automated steps, and re‑measure throughput. Keep what moves the needle.
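
The baseline‑versus‑sprint comparison can be as simple as the sketch below. It uses the median cycle time so a single stuck ticket does not skew the result. The sample numbers and the `pct_change` helper are invented for illustration; substitute exports from your own ticketing system.

```python
from statistics import median


def pct_change(before: float, after: float) -> float:
    """Relative change in percent; negative means the metric went down."""
    return (after - before) / before * 100


# Hypothetical cycle times (hours per work item) for one team.
baseline_cycle_hours = [30, 42, 28, 55, 36]
sprint_cycle_hours = [22, 30, 25, 40, 27]

# Hypothetical throughput (items completed per week).
baseline_items_per_week = 18
sprint_items_per_week = 23

cycle_delta = pct_change(median(baseline_cycle_hours), median(sprint_cycle_hours))
throughput_delta = pct_change(baseline_items_per_week, sprint_items_per_week)

print(f"Median cycle time change: {cycle_delta:+.1f}%")
print(f"Throughput change: {throughput_delta:+.1f}%")
```

One sprint week is a small sample, so treat the result as a signal for where to dig further rather than as proof.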

Chips, Power, and Scale: Nvidia, Anthropic, and the Stack

The AI stack is stratifying. Nvidia's latest GPUs remain the gold standard for frontier training, but alternatives are maturing. Anthropic, OpenAI, and others are experimenting with diversified clouds and model distillation to optimize cost and latency.

Hardware reality check

- Nvidia GPUs: Hopper‑class (H100/H200) and newer Blackwell parts are still capacity-constrained and expensive, but they deliver state‑of‑the‑art performance.

- Cloud accelerators: AWS Trainium/Inferentia and Google TPUs are compelling for targeted workloads, especially inference at scale.

- Power as a constraint: Grid capacity and siting are emerging as hard limits. Expect more creative solutions, from on‑site generation to, yes, space.

Model and device trends to watch

- Portfolio of models: Mix frontier closed models with open weights for cost control and privacy. Benchmark often; the gap shifts fast.

- On‑device AI: With 2025/2026 Android flagships adding stronger NPUs, expect "Sora on Android"-style video and creative workflows to move closer to the edge for drafts and previews.

- Entry‑level assistants: Offerings like ChatGPT Go lower the barrier to broad deployment. Favor official programs over gray‑area hacks: using VPNs to sidestep a provider's terms (for example, regional availability limits) can create legal and security risks.

Action step: Create a model selection rubric. Score candidates on accuracy, latency, security posture, TCO, and retraining cadence. Re‑run the rubric quarterly.
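
A rubric like this is easy to encode as a weighted score so the quarterly re‑run stays consistent. The weights, the 1 to 5 scale, and the candidate names below are assumptions chosen for illustration; tune them to your own priorities.

```python
# Hypothetical weights; they must sum to 1.0.
WEIGHTS = {
    "accuracy": 0.30,
    "latency": 0.20,
    "security": 0.20,
    "tco": 0.20,
    "retraining": 0.10,
}


def rubric_score(scores: dict[str, float]) -> float:
    """Weighted average of 1-5 scores (higher is better on every criterion)."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    return sum(WEIGHTS[criterion] * scores[criterion] for criterion in WEIGHTS)


# Hypothetical candidates scored by the evaluation team.
candidates = {
    "frontier_closed_model": {
        "accuracy": 5, "latency": 3, "security": 4, "tco": 2, "retraining": 3,
    },
    "open_weights_model": {
        "accuracy": 4, "latency": 4, "security": 4, "tco": 4, "retraining": 4,
    },
}

ranked = sorted(
    ((name, rubric_score(scores)) for name, scores in candidates.items()),
    key=lambda item: item[1],
    reverse=True,
)

for name, score in ranked:
    print(f"{name}: {score:.2f}")
```

Raising an error on a missing criterion is deliberate: a candidate that was never scored on security should not quietly rank as if it scored zero or average.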

A Practical 90‑Day Playbook for Q4/Q1

You do not need orbital compute to make material progress. Here's a pragmatic plan to finish 2025 strong and start 2026 faster.

Days 0‑30: Baseline and prove value

- Inventory AI workloads: training, fine‑tuning, RAG, inference, and automation.

- Spin up a dual‑cloud POC for one representative workload and capture unit economics.

- Stand up a lightweight governance pack: data access, prompt standards, human‑in‑the‑loop checkpoints.

Days 31‑60: Buy smart and scale pilots

- Negotiate capacity and credits with two providers; request scheduled GPU blocks.

- Launch two "copilot in the flow" pilots (e.g., SDR email drafting, finance reconciliation) with clear before/after metrics.

- Implement FinOps for AI: cost dashboards, allocation by team, and anomaly alerts.
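
For the anomaly alerts in the FinOps item above, a simple starting point is a robust outlier check on daily spend. The sketch below uses the modified z‑score (median and median absolute deviation), which is less distorted by the spike it is trying to catch than a mean‑based check. The 3.5 threshold is a common rule of thumb, and the cost figures and function name are made up for illustration.

```python
from statistics import median


def flag_cost_anomalies(daily_costs: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices of days whose spend is far from the median.

    Uses the modified z-score: 0.6745 * |x - median| / MAD.
    """
    med = median(daily_costs)
    mad = median([abs(cost - med) for cost in daily_costs])
    if mad == 0:
        # All values (or most of them) are identical; nothing to scale against.
        return []
    return [
        day
        for day, cost in enumerate(daily_costs)
        if 0.6745 * abs(cost - med) / mad > threshold
    ]


# Hypothetical daily GPU spend in USD for one team; day 5 is a runaway job.
daily_spend = [1180, 1220, 1195, 1240, 1210, 3900, 1205]
anomalies = flag_cost_anomalies(daily_spend)
print(f"Anomalous days (0-indexed): {anomalies}")
```

Managed cost tools offer richer detection, but a check like this is enough to route an alert to the owning team while dashboards are still being built.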

Days 61‑90: Operationalize and train

- Productionize the winning pilot with SLOs and monitoring.

- Establish a skills ladder: prompt engineering basics, evaluation methods, and automation guardrails.

- Lock 2026 contracts using your bake‑off data and capacity forecasts.

Key Questions to Ask Vendors This Month

- Capacity: What GPU/accelerator SKUs and queue times can you guarantee during my planned training windows?

- Economics: What is my effective cost per 1 million trained tokens and per 1,000 inference tokens under reserved terms?

- Portability: How will you support multi‑cloud model registries and artifact portability without egress penalties?

- Compliance: How do you support data residency, audit trails, and model evaluation for regulated workloads?

- Roadmap: What's your 12‑month plan for energy and sustainability—on Earth and beyond?
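
When vendors answer the economics question, it helps to check how the quoted rate holds up at your real utilization, since reserved capacity is billed whether or not it is used. The sketch below is a simplified model with hypothetical numbers: a flat monthly commitment, a fixed peak throughput, and a 30‑day month.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month


def effective_cost_per_1k_tokens(
    monthly_commit_usd: float,
    peak_tokens_per_second: float,
    utilization: float,
) -> float:
    """Reserved spend divided by tokens actually served, per 1,000 tokens."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_served = peak_tokens_per_second * utilization * SECONDS_PER_MONTH
    return monthly_commit_usd / tokens_served * 1000


# Hypothetical reserved deal: $20,000/month for capacity peaking at 2,500 tokens/s.
for utilization in (0.2, 0.4, 0.8):
    cost = effective_cost_per_1k_tokens(20_000, 2_500, utilization)
    print(f"utilization {utilization:.0%}: ${cost:.5f} per 1K inference tokens")
```

Halving utilization doubles the effective rate, so a reserved price that looks good on paper can lose to on‑demand pricing for spiky workloads.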

The Bottom Line

OpenAI's deeper work with AWS alongside Microsoft, Google's Project Suncatcher space datacenter concept, and the mix of Nvidia GPUs and cloud accelerators all point to the same reality: AI infrastructure is fragmenting and scaling at once. The winners won't pick one cloud; they'll pick the right cloud for each job and design for portability from day one.

If you're setting 2026 budgets now, act on what is certain: measure unit economics, negotiate capacity, and redesign work with AI in the loop. Want help? Join our community, subscribe to our daily briefing, or reach out for an AI readiness workshop. Are you architecting for gravity—or for orbit?